Containerizing Middleware on K8S: Operator & Helm

- 2024-11-13

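Middleware containerization means packaging traditional middleware (databases, message queues, caches, logging services, and so on) into containers so that it can be deployed, managed, and scaled on a container orchestration platform such as Kubernetes. This improves portability, flexibility, and maintainability, and fits better with cloud-native architectures.

The main advantages of containerized middleware are:

- Fast deployment and scaling: containers make it quick to deploy middleware and easy to scale resources up or down, which supports elastic scaling.

- Environment consistency: containers provide the same runtime environment everywhere, which avoids differences between development, test, and production and reduces "works on my machine" problems.

- Better resource utilization: because containers are lightweight, one physical host can run more middleware instances.

- Simpler management and operations: an orchestrator such as Kubernetes makes it easy to manage multi-instance, multi-node middleware, including automatic restarts, load balancing, and failure recovery.

- Version control and rollback: image tags give middleware version control and fast rollback, which helps keep the system stable.

When containerizing middleware, pay attention to the following:

- Persistent storage: middleware such as databases and caches usually needs to persist data. In a containerized environment you must decide how that data is stored, typically with volumes or an external storage system.

- High availability and disaster recovery: make sure the containerized middleware still provides HA and disaster recovery, usually through multiple replicas and failover mechanisms.

- Performance tuning: container isolation can add some overhead, so the middleware should be tuned to avoid bottlenecks.

- Security: protect both the containers and the data, especially in multi-tenant environments, to avoid privilege leaks and data exposure.

- Monitoring and log management: monitoring and log collection matter even more for containerized middleware. Integrate tools such as Prometheus, Grafana, and ELK for centralized monitoring and logging.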
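To make the persistent-storage point concrete, here is a minimal sketch that prints a PersistentVolumeClaim manifest. The claim name `mq-data`, the namespace `public`, and the 5Gi size used in the usage line are illustrative assumptions, and the sketch presumes the cluster has a default StorageClass or a matching PersistentVolume.

```shell
# Print a minimal PersistentVolumeClaim manifest.
# Arguments: claim name, namespace, requested size (example values only).
render_pvc() {
  pvc_name=$1
  pvc_namespace=$2
  pvc_size=$3
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${pvc_name}
  namespace: ${pvc_namespace}
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${pvc_size}
EOF
}

# Usage (needs a reachable cluster):
#   render_pvc mq-data public 5Gi | kubectl apply -f -
```

A Pod would then reference the claim under `volumes` and mount it into the middleware's data directory, so the data outlives any single container.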

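Commonly containerized middleware includes:

- Databases: MySQL, PostgreSQL, MongoDB, and others, run as containers with persistent storage so that data is not lost.

- Message queues: RabbitMQ, Kafka, and others, which gain elastic scaling from containerization.

- Caches: Redis, Memcached, and others, which can be deployed and scaled quickly in containers.

Before deploying an application to a K8S cluster, consider the following:

1. Understand the application's architecture, configuration, port numbers, and startup command.

2. Decide how the image is built or obtained.

3. Choose a suitable deployment method: is the application stateful, does the configuration need to be separated, where do the deployment files come from, and how is it deployed?

4. Decide how the application will be consumed: which protocol it uses and whether it must be exposed outside the cluster.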

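Deploying a single-instance RabbitMQ

Find a RabbitMQ container image online; here it is looked up on hub.docker.com. Clicking a tag shows the configuration files and exposed ports for that version. Then copy the image to a repository in Alibaba Cloud Container Registry.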

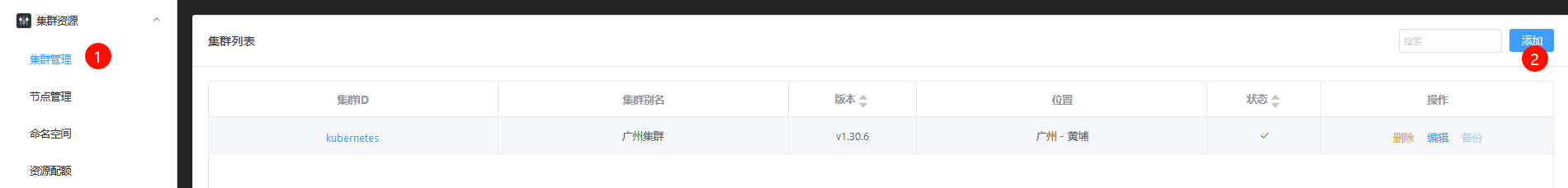

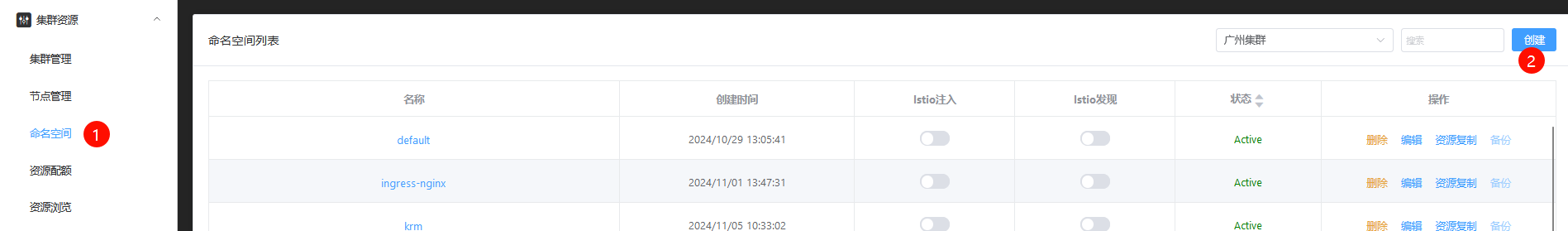

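In the KRM management platform, add the cluster and a namespace. In the left navigation bar click Cluster Management → Add, and paste the contents of /etc/kubernetes/admin.conf into the text box on the right.

In the left navigation bar click Namespace → Create and enter the namespace name in the dialog.

In the left navigation bar click Scheduling Resources → Deployment → Create. Enter the cluster name and select the namespace at the top left, then fill in the Name field on the Basic Configuration page.

Click Container Configuration, enter the image address, and set the minimum memory to 512Mi.

Click Port Configuration → Add and enter the ports to expose: 5672 (AMQP, internal) and 15672 (management UI, external).

Click Health Check. For both the startup probe and the liveness probe choose the port check, with port 5672.

Click Environment Variables → Add and inject the initial RabbitMQ user name and password.

After checking all settings, click the Yaml button at the bottom to review the generated manifest. If it is correct, click Create.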

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: rabbitmq
      labels:
        app: rabbitmq
      annotations:
        app: rabbitmq
      namespace: public
    spec:
      selector:
        matchLabels:
          app: rabbitmq
      replicas: 1
      template:
        metadata:
          labels:
            app: rabbitmq
          annotations:
            app: rabbitmq
        spec:
          affinity:
            podAntiAffinity: {}
            nodeAffinity: {}
          restartPolicy: Always
          imagePullSecrets: []
          dnsPolicy: ClusterFirst
          hostNetwork: false
          volumes: []
          containers:
            - name: rabbitmq
              image: >-
                registry.cn-guangzhou.aliyuncs.com/caijxlinux/rabbitmq:4.0.3-management-alpine
              tty: false
              workingDir: ''
              imagePullPolicy: IfNotPresent
              resources:
                limits:
                  memory: 1024Mi
                  cpu: 1
                requests:
                  memory: 512Mi
                  cpu: 100m
              ports:
                - name: outside
                  containerPort: 15672
                  protocol: TCP
                - name: inside
                  containerPort: 5672
                  protocol: TCP
              lifecycle: {}
              volumeMounts: []
              env:
                - name: RABBITMQ_DEFAULT_USER
                  value: caijx
                - name: RABBITMQ_DEFAULT_PASS
                  value: Jan16@123
              envFrom: []
              startupProbe:
                initialDelaySeconds: 30
                timeoutSeconds: 2
                periodSeconds: 30
                successThreshold: 1
                failureThreshold: 2
                tcpSocket:
                  port: 5672
                  host: ''
              livenessProbe:
                initialDelaySeconds: 30
                timeoutSeconds: 2
                periodSeconds: 30
                successThreshold: 1
                failureThreshold: 2
                tcpSocket:
                  port: 5672
                  host: ''
          initContainers: []
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxSurge: 25%
          maxUnavailable: 25%

After a short wait, click Scheduling Resources → Deployment → View to see the Deployment that was created. Make sure READY shows 1/1.
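The manifest above carries the RabbitMQ user name and password as plain `env` values. One common alternative, sketched here under assumptions, is to keep them in a Secret and import the Secret into the Deployment. The Secret name `rabbitmq-auth` is hypothetical; the Deployment name `rabbitmq` and namespace `public` come from the manifest.

```shell
# Store the RabbitMQ bootstrap credentials in a Secret.
# "rabbitmq-auth" is a hypothetical Secret name; user and password are
# supplied by the caller rather than hard-coded.
create_rabbitmq_secret() {
  secret_ns=$1
  secret_user=$2
  secret_pass=$3
  kubectl create secret generic rabbitmq-auth \
    --namespace "$secret_ns" \
    --from-literal=RABBITMQ_DEFAULT_USER="$secret_user" \
    --from-literal=RABBITMQ_DEFAULT_PASS="$secret_pass"
}

# Import every key of the Secret into the Deployment as an environment
# variable of the same name.
use_rabbitmq_secret() {
  deploy_ns=$1
  kubectl set env deployment/rabbitmq \
    --namespace "$deploy_ns" \
    --from=secret/rabbitmq-auth
}

# Usage (needs a reachable cluster):
#   create_rabbitmq_secret public myuser 'my-password'
#   use_rabbitmq_secret public
```

With this approach the literal `value:` entries would be dropped from the manifest so the password no longer appears in the Deployment spec.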

In the left navigation bar go to Service Publishing → Service → View → Edit and change the Service type to NodePort.

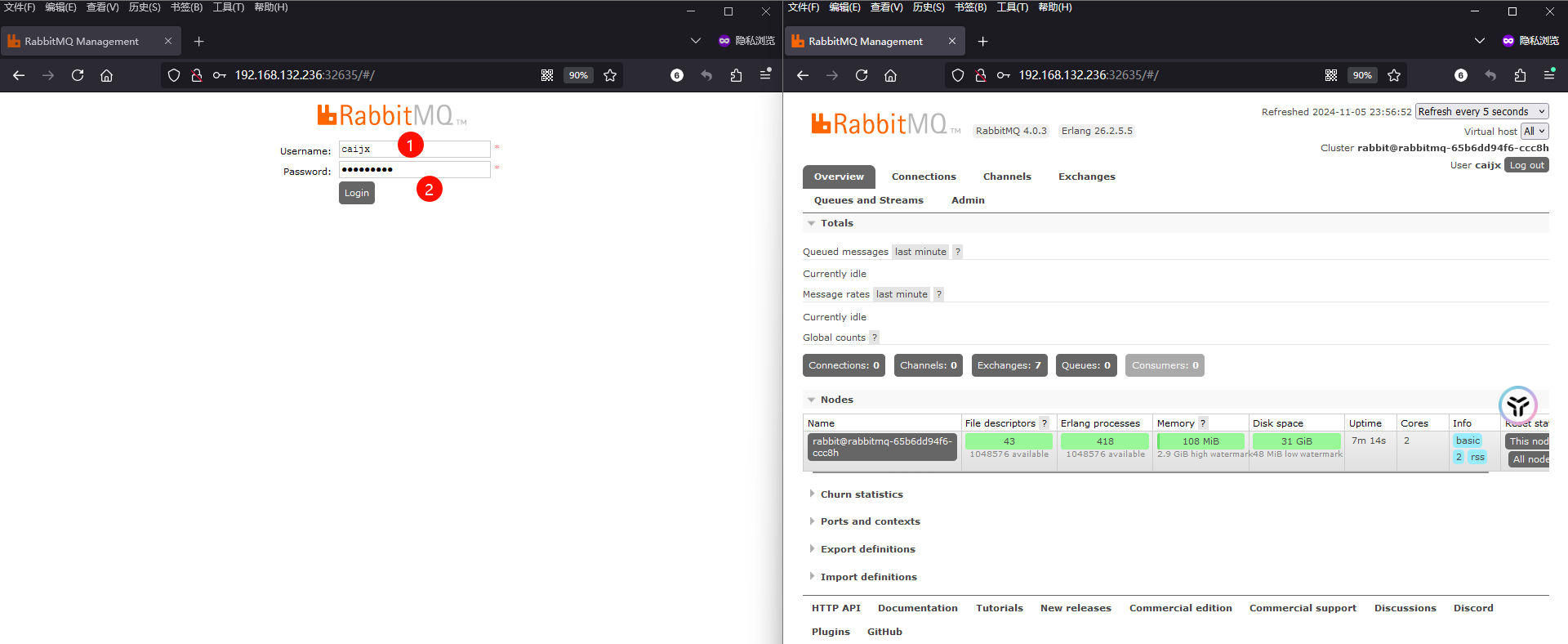

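Using the port exposed by the NodePort Service, open a browser and visit the RabbitMQ web UI at node-IP:port.

Food for thought: should middleware be deployed inside the K8S cluster at all? It depends on several factors.

1. Resource requirements: the middleware's CPU, memory, and storage needs affect the decision. If it needs very high performance, large-scale compute, or low latency, K8s may not be the best choice, because containerization can add some overhead.

2. High availability: K8s provides automatic recovery and load balancing. If the middleware has strict availability requirements, replica management and self-healing of Pods help keep the system stable.

3. Elastic scaling: the Horizontal Pod Autoscaler (HPA) adjusts the number of instances as load changes, which benefits middleware with large load swings.

4. Deployment and operations: K8s offers strong deployment, monitoring, logging, and version management capabilities. If the middleware is updated, maintained, or scaled frequently, K8s simplifies that work.

5. Storage and state management: some middleware, such as databases and message queues, has strict state requirements. K8s provides StatefulSet and PersistentVolumes, but for middleware that needs strict durability and consistency you should check whether these are enough.

6. Existing architecture and tech stack: if the middleware already runs well on physical or virtual machines and has little need for scaling or elasticity, migrating to K8s may add complexity without much benefit.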
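The same change can be made from the command line. The sketch below is an assumption-laden alternative to the UI steps: the Service name is passed in because the article does not show what name the platform generated, and `15672` is the management port from the Deployment.

```shell
# Switch an existing Service to type NodePort.
expose_nodeport() {
  svc_name=$1
  svc_ns=$2
  kubectl patch service "$svc_name" --namespace "$svc_ns" \
    --type merge -p '{"spec":{"type":"NodePort"}}'
}

# Print the node port that was allocated for a given Service port.
node_port_for() {
  svc_name=$1
  svc_ns=$2
  svc_port=$3
  kubectl get service "$svc_name" --namespace "$svc_ns" \
    -o jsonpath="{.spec.ports[?(@.port==${svc_port})].nodePort}"
}

# Usage (needs a reachable cluster; "rabbitmq" is an assumed Service name):
#   expose_nodeport rabbitmq public
#   node_port_for rabbitmq public 15672
```

The value printed by `node_port_for` is the port to append to any node's IP address in the browser.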

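Deploying a Redis cluster with an Operator

In Kubernetes, an Operator is a pattern for extending Kubernetes that automates the lifecycle management of complex applications. An Operator uses Kubernetes' own API and controllers to automate operations for one specific application.

Specifically, an Operator does the following:

- Encapsulates domain knowledge: the operational knowledge and best practices for a complex application (a database, message queue, or distributed system) are packaged as native Kubernetes resources, so K8s can manage the application through the Operator without manual intervention.

- Automates tasks: an Operator can carry out routine tasks such as installation, configuration, backup, restore, and scaling. For example, a database Operator can perform primary/replica failover, backup, and restore when needed.

- Extends the API with CRDs (Custom Resource Definitions): an Operator usually extends the Kubernetes API with CRDs so that the application is managed as a Kubernetes resource. These custom resources (MySQL, Kafka, and so on) can be managed with kubectl just like a Pod or a Service.

- Manages the lifecycle: by continuously watching the state of its custom resources, an Operator automates the application's lifecycle. When it detects an anomaly it can trigger a repair (restart, scale out, scale in, and so on) so that the application stays in the desired state.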
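The "desired state" idea in the last point can be illustrated with a toy reconcile step. This is a conceptual sketch only: a real Operator watches the API server and acts on custom resources, whereas the function below just compares two numbers and names the action a controller would take.

```shell
# One reconcile step: compare the desired and the observed replica count
# and print the action a controller would take.
reconcile() {
  want=$1
  have=$2
  if [ "$have" -lt "$want" ]; then
    echo "scale-up $((want - have))"
  elif [ "$have" -gt "$want" ]; then
    echo "scale-down $((have - want))"
  else
    echo "in-sync"
  fi
}

# A toy control loop: each pass adds one replica until the observed state
# matches the desired state.
desired=4
observed=1
while [ "$(reconcile "$desired" "$observed")" != "in-sync" ]; do
  echo "observed=$observed -> $(reconcile "$desired" "$observed")"
  observed=$((observed + 1))
done
echo "observed=$observed -> in-sync"
```

The loop never asks how the difference arose; it only keeps closing the gap, which is why an Operator can recover from failures it did not cause.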

Clone the Redis operator repository:

[root@master-01 ~]# git clone https://gitee.com/dukuan/td-redis-operator.git

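fatal: destination path 'td-redis-operator' already exists and is not an empty directory.

(The fatal message appears only because the repository had already been cloned on this host.)

Create the Redis Operator and its CRDs: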

[root@master-01 ~]# cd ~/td-redis-operator/deploy/

[root@master-01 deploy]# kubectl create -f deploy.yaml

namespace/redis created

customresourcedefinition.apiextensions.k8s.io/redisclusters.cache.tongdun.net created

customresourcedefinition.apiextensions.k8s.io/redisstandbies.cache.tongdun.net created

role.rbac.authorization.k8s.io/admin created

role.rbac.authorization.k8s.io/operator created

rolebinding.rbac.authorization.k8s.io/admin created

rolebinding.rbac.authorization.k8s.io/operator created

clusterrole.rbac.authorization.k8s.io/admin-cluster created

clusterrolebinding.rbac.authorization.k8s.io/admin-cluster created

serviceaccount/operator created

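deployment.apps/operator created

Note: creating these resources as-is fails, because the image address is docker.io/tongduncloud/td-redis-operator:latest, which cannot be pulled from mainland China. You can mirror the image to a domestic registry with the method from the cloud-native chapter. Below is a more roundabout alternative, which requires a proxy that can reach Docker Hub.

In the proxy client, enable the "Allow LAN access" option and note the ports it exposes:

Local: [socks:10809] | [http (system proxy):10809]  LAN: [socks:10810] | [http:10811]

Edit /etc/profile to add the HTTPS proxy together with the addresses that must bypass it. Setting only https_proxy would send traffic for in-cluster resources through the proxy and make them unreachable. (Note: the goal is to let the worker nodes pull the image. Some readers change only the master node, which has no effect, because the image is pulled by the node that runs the Pod, not by the master.)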

[root@node-02 ~]# vim /etc/profile

export https_proxy="http://192.168.132.1:10811"

export no_proxy='192.168.132.169,192.168.132.236,127.0.0.1,localhost'

[root@node-02 ~]# source /etc/profile

On node-02, pull the image into the k8s.io containerd namespace:

[root@node-02 ~]# ctr -n k8s.io image pull docker.io/tongduncloud/td-redis-operator:latest

docker.io/tongduncloud/td-redis-operator:latest: resolved |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:d9ca9867216293a9848705efb90e043ceb6071003958912563c80f53e2cde9ae: exists |++++++++++++++++++++++++++++++++++++++|

layer-sha256:983f0dfb6428e884a7160f0cbbf48bb994a20d25978978c823fd1c2c54b35c07: exists |++++++++++++++++++++++++++++++++++++++|

config-sha256:577e38deb41dfdfe12a7ee8058c161680b6ef2b9a01062a55a8ea174ffcbbd53: exists |++++++++++++++++++++++++++++++++++++++|

layer-sha256:801bfaa63ef2094d770c809815b9e2b9c1194728e5e754ef7bc764030e140cea: exists |++++++++++++++++++++++++++++++++++++++|

layer-sha256:9a8d0188e48174d9e60f943c4e463c23268b864ed4f146041bee8d79710cc359: exists |++++++++++++++++++++++++++++++++++++++|

layer-sha256:8a3f5c4e0176ac6c0887cb55795f7ffd12376b33d546a11bb4f5c306133e7606: exists |++++++++++++++++++++++++++++++++++++++|

layer-sha256:3f7cb00af226fa61d4e8d86e29718d08dc7a79bf20c0ed7644f070f880c209e2: exists |++++++++++++++++++++++++++++++++++++++|

layer-sha256:e421f2f8acb5bd8968f6637648db4aa5598f8bb6791054a235deafa7444be155: exists |++++++++++++++++++++++++++++++++++++++|

layer-sha256:f41cc3c7c3e4a187ca9bac01475237c046b1aada6cb3ff3fabcfe2729d863075: exists |++++++++++++++++++++++++++++++++++++++|

layer-sha256:60ffb81ea60a2b424c84500d14bbdbadfb0d989bb7d85224e6b5ba8dcdcd8eae: exists |++++++++++++++++++++++++++++++++++++++|

layer-sha256:a70a91bd8209b692c69e2144f2437140b79882f1f692fe6427e52c8d4d1cfe88: exists |++++++++++++++++++++++++++++++++++++++|

layer-sha256:388b31e85021329323d075d37cde5935fe8c57066ff6e38d68f033f785e4c049: exists |++++++++++++++++++++++++++++++++++++++|

elapsed: 1.9 s total: 0.0 B (0.0 B/s)

unpacking linux/amd64 sha256:d9ca9867216293a9848705efb90e043ceb6071003958912563c80f53e2cde9ae...

done: 17.135682ms

Then, on master-01, change the image pull policy in deploy.yaml to IfNotPresent or Never. The resources can now be created successfully:

[root@master-01 deploy]# kubectl get pods -n redis

NAME READY STATUS RESTARTS AGE

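operator-65cb5d57fb-8grnr 1/1 Running 0 13m

Edit the YAML file for the Redis cluster. The default images cannot be pulled, so point them at the author's registry. Change the network mode to NodePort and set vip to an empty string (without the double quotes, validation reports that the vip field must not be empty). For the meaning of each field see the official wiki: https://github.com/tongdun/td-redis-operator/wiki/Redis-Cluster%E4%BA%A4%E4%BB%98%E4%BB%8B%E7%BB%8D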

[root@master-01 ~]# cd ~/td-redis-operator/cr

[root@master-01 cr]# vim redis_cluster.yaml

    apiVersion: cache.tongdun.net/v1alpha1
    kind: RedisCluster
    metadata:
      name: redis-cluster-trump
      namespace: redis
    spec:
      app: cluster-trump
      capacity: 32768
      dc: hz
      env: demo
      image: registry.cn-guangzhou.aliyuncs.com/caijxlinux/redis-cluster:0.2
      monitorimage: registry.cn-guangzhou.aliyuncs.com/caijxlinux/redis-exporter:1.0
      netmode: NodePort
      proxyimage: registry.cn-guangzhou.aliyuncs.com/caijxlinux/predixy:1.0
      proxysecret: "123"  # proxy password
      realname: demo
      secret: abc  # Redis password
      size: 3
      storageclass: ""
      vip: ""

Create the Redis cluster and check the resources. Running describe on a redis-cluster-trump Pod shows that each Pod contains two containers:

[root@master-01 cr]# kubectl create -f redis_cluster.yaml

rediscluster.cache.tongdun.net/redis-cluster-trump created

[root@master-01 cr]# kubectl get pods -n redis

NAME READY STATUS RESTARTS AGE

operator-65cb5d57fb-8grnr 1/1 Running 0 50m

predixy-redis-cluster-trump-56d5846ccc-9vqm6 0/1 Pending 0 71s

predixy-redis-cluster-trump-56d5846ccc-xklgg 0/1 Pending 0 71s

redis-cluster-trump-0-0 2/2 Running 0 90s

redis-cluster-trump-0-1 2/2 Running 0 84s

redis-cluster-trump-1-0 2/2 Running 0 90s

redis-cluster-trump-1-1 2/2 Running 0 86s

redis-cluster-trump-2-0 2/2 Running 0 90s

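redis-cluster-trump-2-1 2/2 Running 0 84s

The Pods that handle request routing and load balancing for the Redis cluster (predixy) are Pending. describe shows that they cannot be scheduled because of insufficient resources. Changing the memory request and limit on their Deployment to 128Mi and 2Gi lets them start. (Note: edit the Deployment; the resources of an existing Pod cannot be changed directly.)

[In a Redis cluster, Predixy is a high-performance proxy that handles request routing and load balancing. It offers several load-balancing algorithms (consistent hashing, random, round-robin) as well as connection pooling, read/write splitting, and automatic failover, and is often used to improve the stability and performance of Redis clusters in distributed architectures.]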
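As a non-interactive alternative to the `kubectl edit` session that follows, the same values could be set with `kubectl set resources`. This is a sketch rather than the author's method; the Deployment name and namespace are taken from the article.

```shell
# Set the memory request and limit on a Deployment without opening an editor.
set_proxy_memory() {
  target_ns=$1
  target_deploy=$2
  kubectl set resources deployment "$target_deploy" \
    --namespace "$target_ns" \
    --requests=memory=128Mi \
    --limits=memory=2Gi
}

# Usage (needs a reachable cluster):
#   set_proxy_memory redis predixy-redis-cluster-trump
```

Either way the Deployment rolls out new Pods, because the Pod template has changed.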

[root@master-01 cr]# kubectl edit deployments.apps -n redis predixy-redis-cluster-trump

    resources:
      limits:
        memory: 2Gi
      requests:
        memory: 128Mi

[root@master-01 cr]# kubectl get pods -n redis

NAME READY STATUS RESTARTS AGE

operator-65cb5d57fb-8grnr 1/1 Running 0 55m

predixy-redis-cluster-trump-599bc6f66b-76kvc 1/1 Running 0 3s

predixy-redis-cluster-trump-599bc6f66b-msk5h 1/1 Running 0 6s

redis-cluster-trump-0-0 2/2 Running 0 6m5s

redis-cluster-trump-0-1 2/2 Running 0 5m59s

redis-cluster-trump-1-0 2/2 Running 0 6m5s

redis-cluster-trump-1-1 2/2 Running 0 6m1s

redis-cluster-trump-2-0 2/2 Running 0 6m5s

redis-cluster-trump-2-1 2/2 Running 0 5m59s

[root@master-01 cr]# kubectl get -n redis redisclusters.cache.tongdun.net

NAME AGE

redis-cluster-trump 45m

Check the cluster status. When the cluster is usable, the phase field is Ready, and redis-cluster-trump-0, -1, and -2 each list the hash slots they own:
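To check only the phase, rather than reading the whole object printed next, a JSONPath query is enough. The sketch below assumes the `status.phase` field shown in the article's output; the polling interval variable `POLL_SECONDS` and the attempt count are arbitrary choices of this example.

```shell
# Print the phase reported in the RedisCluster status.
cluster_phase() {
  phase_ns=$1
  phase_name=$2
  kubectl get rediscluster "$phase_name" --namespace "$phase_ns" \
    -o jsonpath='{.status.phase}'
}

# Poll until the phase is Ready. Returns 0 on success and 1 if the
# attempts run out (default 30 attempts, POLL_SECONDS apart, default 5).
wait_until_ready() {
  wait_ns=$1
  wait_name=$2
  attempts=${3:-30}
  while [ "$attempts" -gt 0 ]; do
    if [ "$(cluster_phase "$wait_ns" "$wait_name")" = "Ready" ]; then
      return 0
    fi
    attempts=$((attempts - 1))
    sleep "${POLL_SECONDS:-5}"
  done
  return 1
}

# Usage (needs a reachable cluster):
#   wait_until_ready redis redis-cluster-trump && echo "cluster is ready"
```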

[root@master-01 cr]# kubectl get rediscluster -n redis redis-cluster-trump -oyaml

    apiVersion: cache.tongdun.net/v1alpha1
    kind: RedisCluster
    metadata:
      creationTimestamp: "2024-11-10T18:10:47Z"
      generation: 1
      name: redis-cluster-trump
      namespace: redis
      resourceVersion: "833925"
      uid: e00d2de4-cf97-4ee0-8b40-9c6c7a74dd46
    spec:
      app: cluster-trump
      capacity: 32768
      dc: hz
      env: demo
      image: registry.cn-guangzhou.aliyuncs.com/caijxlinux/redis-cluster:0.2
      monitorimage: registry.cn-guangzhou.aliyuncs.com/caijxlinux/redis-exporter:1.0
      netmode: NodePort
      proxyimage: registry.cn-guangzhou.aliyuncs.com/caijxlinux/predixy:1.0
      proxysecret: "123"
      realname: demo
      secret: abc
      size: 3
      storageclass: ""
      vip: ""
    status:
      capacity: 32768
      clusterIP: 10.96.99.146:6379
      externalip: :32211
      gmtCreate: "2024-11-10 18:11:06"
      phase: Ready
      size: 3
      slots:
        redis-cluster-trump-0:
        - 10923-16383
        redis-cluster-trump-1:
        - 0-5460
        redis-cluster-trump-2:
        - 5461-10922

List the Services:

[root@master-01 cr]# kubectl get svc -n redis

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

predixy-redis-cluster-trump NodePort 10.96.99.146 <none> 6379:32211/TCP 44m

redis-cluster-trump ClusterIP 10.96.243.75 <none> 6379/TCP 44m

Open a shell in the redis-cluster-trump-0 container of the redis-cluster-trump-0-0 Pod and use the Redis client to verify the cluster state:

[root@master-01 cr]# kubectl exec -it -n redis redis-cluster-trump-0-0 -c redis-cluster-trump-0 -- bash

[root@redis-cluster-trump-0-0 /]# redis-cli

127.0.0.1:6379>

Authenticate with the Redis password defined in the YAML file, then view the cluster information and the node status:

127.0.0.1:6379> auth abc

OK

127.0.0.1:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:3

cluster_my_epoch:3

cluster_stats_messages_ping_sent:9172

cluster_stats_messages_pong_sent:9111

cluster_stats_messages_meet_sent:5

cluster_stats_messages_sent:18288

cluster_stats_messages_ping_received:9111

cluster_stats_messages_pong_received:9177

cluster_stats_messages_received:18288

127.0.0.1:6379> cluster nodes

d7b24c9ffd2cd994df4b657186d57d1dbc82f6b3 172.16.184.39:6379@16379 master - 0 1731265272082 1 connected 0-5460

feae8c04f000742384fac132a3051b60c6278468 172.16.184.48:6379@16379 slave d7b24c9ffd2cd994df4b657186d57d1dbc82f6b3 0 1731265271882 1 connected

bcaea6e301aa828dce74d6f0d563a50d5151c928 172.16.184.43:6379@16379 master - 0 1731265271000 2 connected 5461-10922

343d36f93bbea43efd240d4154df597ec116bb24 172.16.184.2:6379@16379 slave bcaea6e301aa828dce74d6f0d563a50d5151c928 0 1731265271000 2 connected

2779763a6558098dbf93a204c518f671d5b114f0 172.16.184.40:6379@16379 myself,slave 60e2ff82f1850b4aac0d6911d8f0e614dc47f301 0 1731265271000 0 connected

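60e2ff82f1850b4aac0d6911d8f0e614dc47f301 172.16.184.41:6379@16379 master - 0 1731265271000 3 connected 10923-16383

Set a key. The server answers with MOVED, which means the key's hash slot is served by another node. Connect to that node, authenticate again, then write the data and read it back: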
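The MOVED reply in the session that follows is a consequence of how Redis Cluster places keys: the slot is CRC16 of the key modulo 16384, and each master owns a range of slots. The sketch below reimplements that calculation in plain shell so the slot number can be cross-checked. It ignores hash tags (the `{...}` syntax) and assumes ASCII keys.

```shell
# CRC16, XMODEM variant (polynomial 0x1021, initial value 0), which is the
# checksum Redis Cluster uses for key hashing.
crc16_xmodem() {
  crc=0
  # od prints each byte of the key as an unsigned decimal number.
  for byte in $(printf '%s' "$1" | od -An -v -tu1); do
    crc=$(( crc ^ (byte << 8) ))
    bit=0
    while [ "$bit" -lt 8 ]; do
      if [ $(( crc & 0x8000 )) -ne 0 ]; then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
      bit=$((bit + 1))
    done
  done
  echo "$crc"
}

# Slot = CRC16(key) mod 16384. Hash tags are not handled in this sketch.
key_slot() {
  echo $(( $(crc16_xmodem "$1") % 16384 ))
}

key_slot caijx   # prints 1772
```

Slot 1772 lies in the range 0-5460, which the `cluster nodes` output assigns to the master at 172.16.184.39, so a write sent to any other node is redirected there.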

127.0.0.1:6379> set caijx boy

(error) MOVED 1772 172.16.184.39:6379

127.0.0.1:6379> exit

[root@redis-cluster-trump-0-0 /]# redis-cli -h 172.16.184.39

172.16.184.39:6379> auth abc

OK

172.16.184.39:6379> get caijx

(nil)

172.16.184.39:6379> set caijx boy

OK

172.16.184.39:6379> get caijx

"boy"

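172.16.184.39:6379> exit

Connect to the Redis cluster through the Service name, written as service-name.namespace. The -c option makes redis-cli follow redirects automatically, so there is no need to switch nodes by hand: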

[root@redis-cluster-trump-0-0 /]# redis-cli -h redis-cluster-trump.redis -c

redis-cluster-trump.redis:6379> auth abc

OK

redis-cluster-trump.redis:6379> set xiaocai tongxue

-> Redirected to slot [14632] located at 172.16.184.41:6379 # redirected automatically, but authentication is required again

(error) NOAUTH Authentication required.

172.16.184.41:6379> auth abc

OK

172.16.184.41:6379> get xiaocai

(nil)

172.16.184.41:6379> set xiaocai tongxue

OK

172.16.184.41:6379> get xiaocai

"tongxue"

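172.16.184.41:6379> exit

Connect through the proxy instead. The proxy routes each request to the right node, so keys written on different nodes can be read without any redirect: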

[root@redis-cluster-trump-0-0 /]# redis-cli -h predixy-redis-cluster-trump

predixy-redis-cluster-trump:6379> auth 123

OK

predixy-redis-cluster-trump:6379> get caijx

"boy"

predixy-redis-cluster-trump:6379> get xiaocai

"tongxue"

predixy-redis-cluster-trump:6379> set junxian handsome

OK

predixy-redis-cluster-trump:6379> get junxian

"handsome"

Scale out the Redis cluster by changing the size field in redis_cluster.yaml to 4. Alternatively, edit the redisclusters.cache.tongdun.net resource directly:

[root@master-01 cr]# vim redis_cluster.yaml

size: 4

[root@master-01 cr]# kubectl replace -f redis_cluster.yaml

rediscluster.cache.tongdun.net/redis-cluster-trump replaced

[root@master-01 cr]# kubectl get pods -n redis

NAME READY STATUS RESTARTS AGE

operator-65cb5d57fb-8grnr 1/1 Running 0 3h18m

predixy-redis-cluster-trump-599bc6f66b-76kvc 1/1 Running 0 143m

predixy-redis-cluster-trump-599bc6f66b-msk5h 1/1 Running 0 143m

redis-cluster-trump-0-0 2/2 Running 0 149m

redis-cluster-trump-0-1 2/2 Running 0 149m

redis-cluster-trump-1-0 2/2 Running 0 149m

redis-cluster-trump-1-1 2/2 Running 0 149m

redis-cluster-trump-2-0 2/2 Running 0 149m

redis-cluster-trump-2-1 2/2 Running 0 149m

redis-cluster-trump-3-0 2/2 Running 0 10s

redis-cluster-trump-3-1 2/2 Running 0 7s

Log in to the Redis cluster and check whether the scale-out affected existing data:

[root@master-01 cr]# kubectl exec -it -n redis redis-cluster-trump-0-0 -c redis-cluster-trump-0 -- bash

[root@redis-cluster-trump-0-0 /]# redis-cli -h redis-cluster-trump -c

redis-cluster-trump:6379> auth abc

OK

redis-cluster-trump:6379> get caijx

-> Redirected to slot [1772] located at 172.16.184.39:6379

(error) NOAUTH Authentication required.

172.16.184.39:6379> auth abc

OK

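172.16.184.39:6379> get caijx

"boy"

Deploy the graphical management tool for Redis. The AlreadyExists errors for admin-cluster are harmless: those objects were already created by deploy.yaml.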

[root@master-01 deploy]# kubectl create -f web-deploy.yaml

deployment.apps/manager created

service/manager created

configmap/manager created

serviceaccount/admin created

Error from server (AlreadyExists): error when creating "web-deploy.yaml": clusterroles.rbac.authorization.k8s.io "admin-cluster" already exists

Error from server (AlreadyExists): error when creating "web-deploy.yaml": clusterrolebindings.rbac.authorization.k8s.io "admin-cluster" already exists

[root@master-01 deploy]# kubectl get pods -n redis

NAME READY STATUS RESTARTS AGE

manager-5f794f5c-k2pxl 2/2 Running 0 98s

operator-65cb5d57fb-8grnr 1/1 Running 0 3h32m

predixy-redis-cluster-trump-599bc6f66b-76kvc 1/1 Running 0 156m

predixy-redis-cluster-trump-599bc6f66b-msk5h 1/1 Running 0 157m

redis-cluster-trump-0-0 2/2 Running 0 163m

redis-cluster-trump-0-1 2/2 Running 0 162m

redis-cluster-trump-1-0 2/2 Running 0 163m

redis-cluster-trump-1-1 2/2 Running 0 162m

redis-cluster-trump-2-0 2/2 Running 0 163m

redis-cluster-trump-2-1 2/2 Running 0 162m

redis-cluster-trump-3-0 2/2 Running 0 14m

redis-cluster-trump-3-1 2/2 Running 0 14m

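Access the tool through the port exposed by its Service (not shown here). After logging in you can view the cluster. You can also delete the existing cluster and create it again from the graphical interface.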

[root@master-01 deploy]# kubectl get svc -n redis

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

manager NodePort 10.96.116.126 <none> 8088:32522/TCP 37s

predixy-redis-cluster-trump NodePort 10.96.99.146 <none> 6379:32211/TCP 161m

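redis-cluster-trump ClusterIP 10.96.243.75 <none> 6379/TCP 161m

Helm

Helm is the package manager for Kubernetes. It helps define, install, and manage Kubernetes applications, much like apt or yum on Linux, and makes it easy to package, configure, and manage applications in a cluster.

Core Helm concepts

- Chart: the basic unit in Helm, a "recipe" for an application. It contains the definitions (YAML files) of all Kubernetes resources needed to deploy a particular application. Redis, MySQL, and similar applications each have their own Chart.

- Release: every installation of a Chart into a cluster creates a Release, which is one instance of that Chart in the cluster. Several Releases can come from the same Chart, each with its own configuration.

- Repository: Charts are hosted in a Repository, similar to a code repository. Users pull and install Charts from public or private Helm repositories.

Install Helm. The release archive and the charts used later can be downloaded from the following addresses: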

Helm client installation: https://helm.sh/docs/intro/install/

Helm charts hub: https://artifacthub.io/

Upload the Helm archive to the master-01 node:

[root@master-01 ~]# ls | grep helm

helm-v3.16.2-linux-amd64.tar.gz

Extract the archive and move the executable to /usr/local/bin/:

[root@master-01 ~]# tar -zxvf helm-v3.16.2-linux-amd64.tar.gz

linux-amd64/

linux-amd64/LICENSE

linux-amd64/helm

linux-amd64/README.md

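[root@master-01 ~]# mv linux-amd64/helm /usr/local/bin/helm

Add a repository that provides a ZooKeeper chart. (Other ZooKeeper charts can be found through the Charts link in the navigation bar of the Helm website.)

[root@master-01 ~]# helm repo add bitnami https://charts.bitnami.com/bitnami

"bitnami" has been added to your repositories

Install the latest version of the ZooKeeper chart. (Use the --version option to pin a specific chart version.)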

[root@master-01 ~]# helm install my-zookeeper bitnami/zookeeper

NAME: my-zookeeper

LAST DEPLOYED: Mon Nov 11 21:22:00 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: zookeeper

CHART VERSION: 13.6.0

APP VERSION: 3.9.3

** Please be patient while the chart is being deployed **

ZooKeeper can be accessed via port 2181 on the following DNS name from within your cluster:

my-zookeeper.default.svc.cluster.local

To connect to your ZooKeeper server run the following commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=zookeeper,app.kubernetes.io/instance=my-zookeeper,app.kubernetes.io/component=zookeeper" -o jsonpath="{.items[0].metadata.name}")

kubectl exec -it $POD_NAME -- zkCli.sh

To connect to your ZooKeeper server from outside the cluster execute the following commands:

kubectl port-forward --namespace default svc/my-zookeeper 2181:2181 &

zkCli.sh 127.0.0.1:2181

WARNING: There are "resources" sections in the chart not set. Using "resourcesPreset" is not recommended for production. For production installations, please set the following values according to your workload needs:

- resources

- tls.resources

+info https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/

List the other available versions:

[root@master-01 ~]# helm search repo bitnami/zookeeper -l

NAME CHART VERSION APP VERSION DESCRIPTION

bitnami/zookeeper 13.6.0 3.9.3 Apache ZooKeeper provides a reliable, centraliz...

bitnami/zookeeper 13.5.1 3.9.3 Apache ZooKeeper provides a reliable, centraliz...

bitnami/zookeeper 13.5.0 3.9.2 Apache ZooKeeper provides a reliable, centraliz...

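... (output truncated) ...

The ZooKeeper Pods cannot start, because the chart requires persistent storage by default and this cluster has nothing to bind the PersistentVolumeClaims to. The deployment is therefore not demonstrated further.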

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

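Warning FailedScheduling 62s default-scheduler 0/5 nodes are available: pod has unbound immediate PersistentVolumeClaims. preemption: 0/5 nodes are available: 5 Preemption is not helpful for scheduling.

Installing Kafka with Helm

Basic Helm commands

- Search for a chart: helm search

- Download a chart: helm pull

- Create a chart: helm create

- Install a chart: helm install

- List releases: helm list

- Show the values a release was installed with: helm get values

- Upgrade a release: helm upgrade

- Delete a release: helm delete (an alias of helm uninstall in Helm 3)

Newer versions of Kafka can run without ZooKeeper. Create the public-service namespace: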

[root@master-01 ~]# kubectl create ns public-service

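namespace/public-service created

No additional repository is needed, since bitnami is already configured. Install Kafka with the parameters adjusted as follows: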

[root@master-01 ~]# helm install kafka-kraft bitnami/kafka \

> --set kraft.enabled=true \

> --set zookeeper.enabled=false \

> --set controller.replicaCount=3 \

> --set controller.persistence.enabled=false \

> --set broker.persistence.enabled=false \

> --set listeners.client.protocol=PLAINTEXT \

> --set listeners.controller.protocol=PLAINTEXT \

> --set listeners.interbroker.protocol=PLAINTEXT \

> --set listeners.external.protocol=PLAINTEXT -n public-service

NAME: kafka-kraft

LAST DEPLOYED: Mon Nov 11 21:52:40 2024

NAMESPACE: public-service

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: kafka

CHART VERSION: 30.1.8

APP VERSION: 3.8.1

** Please be patient while the chart is being deployed **

Kafka can be accessed by consumers via port 9092 on the following DNS name from within your cluster:

kafka-kraft.public-service.svc.cluster.local

Each Kafka broker can be accessed by producers via port 9092 on the following DNS name(s) from within your cluster:

kafka-kraft-controller-0.kafka-kraft-controller-headless.public-service.svc.cluster.local:9092

kafka-kraft-controller-1.kafka-kraft-controller-headless.public-service.svc.cluster.local:9092

kafka-kraft-controller-2.kafka-kraft-controller-headless.public-service.svc.cluster.local:9092

To create a pod that you can use as a Kafka client run the following commands:

kubectl run kafka-kraft-client --restart='Never' --image docker.io/bitnami/kafka:3.8.1-debian-12-r0 --namespace public-service --command -- sleep infinity

kubectl exec --tty -i kafka-kraft-client --namespace public-service -- bash

PRODUCER:

kafka-console-producer.sh \

--bootstrap-server kafka-kraft.public-service.svc.cluster.local:9092 \

--topic test

CONSUMER:

kafka-console-consumer.sh \

--bootstrap-server kafka-kraft.public-service.svc.cluster.local:9092 \

--topic test \

--from-beginning

WARNING: There are "resources" sections in the chart not set. Using "resourcesPreset" is not recommended for production. For production installations, please set the following values according to your workload needs:

- controller.resources

+info https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/

List the Services that were created:

[root@master-01 ~]# kubectl get svc -n public-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kafka-kraft ClusterIP 10.96.234.88 <none> 9092/TCP 6m47s

kafka-kraft-controller-headless ClusterIP None <none> 9094/TCP,9092/TCP,9093/TCP 6m47s

List the Pods that were created:

[root@master-01 ~]# kubectl get pods -n public-service

NAME READY STATUS RESTARTS AGE

kafka-kraft-controller-0 1/1 Running 0 76s

kafka-kraft-controller-1 1/1 Running 0 2m38s

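kafka-kraft-controller-2 1/1 Running 0 2m38s

On master-01, create a Kafka client Pod. Open a second terminal on master-01 and enter the same container from both terminals to simulate a producer and a consumer: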

[root@master-01 ~]# kubectl run kafka-kraft-client --restart='Never' --image docker.io/bitnami/kafka:3.8.1-debian-12-r0 --namespace public-service --command -- sleep infinity

pod/kafka-kraft-client created

[root@master-01 ~]# kubectl exec --tty -i kafka-kraft-client --namespace public-service -- bash

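I have no name!@kafka-kraft-client:/$

In the producer terminal, run the following command and type any text once the > prompt appears. The UNKNOWN_TOPIC_OR_PARTITION warnings are typically transient: they show up while the topic is being created on first use.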

I have no name!@kafka-kraft-client:/$ kafka-console-producer.sh \

--bootstrap-server kafka-kraft.public-service.svc.cluster.local:9092 \

--topic test

>i am a boy

[2024-11-11 14:06:22,055] WARN [Producer clientId=console-producer] Error while fetching metadata with correlation id 6 : {test=UNKNOWN_TOPIC_OR_PARTITION} (org.apache.kafka.clients.NetworkClient)

[2024-11-11 14:06:22,196] WARN [Producer clientId=console-producer] Error while fetching metadata with correlation id 7 : {test=UNKNOWN_TOPIC_OR_PARTITION} (org.apache.kafka.clients.NetworkClient)

[2024-11-11 14:06:22,431] WARN [Producer clientId=console-producer] Error while fetching metadata with correlation id 8 : {test=UNKNOWN_TOPIC_OR_PARTITION} (org.apache.kafka.clients.NetworkClient)

>i will learn about K8S

>

In the consumer terminal, run the following command. It receives the messages sent by the producer and prints them to the terminal:

[root@master-01 ~]# kubectl exec --tty -i kafka-kraft-client --namespace public-service -- bash

I have no name!@kafka-kraft-client:/$ kafka-console-consumer.sh \

--bootstrap-server kafka-kraft.public-service.svc.cluster.local:9092 \

--topic test \

--from-beginning

i am a boy

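i will learn about K8S

View the values that were supplied when Kafka was installed. (Note: helm get values shows only user-supplied overrides, so a release installed with a bare helm install, without --set or -f, shows nothing here.)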

[root@master-01 ~]# helm list -n public-service

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

kafka-kraft public-service 1 2024-11-11 21:52:40.22452716 +0800 CST deployed kafka-30.1.8 3.8.1

[root@master-01 ~]# helm get values -n public-service kafka-kraft

    USER-SUPPLIED VALUES:
    broker:
      persistence:
        enabled: false
    controller:
      persistence:
        enabled: false
      replicaCount: 3
    kraft:
      enabled: true
    listeners:
      client:
        protocol: PLAINTEXT
      controller:
        protocol: PLAINTEXT
      external:
        protocol: PLAINTEXT
      interbroker:
        protocol: PLAINTEXT
    zookeeper:
      enabled: false

Helm chart directory layout

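Create a chart: helm create helm-test

    ├── charts                      # dependencies (subcharts)
    ├── Chart.yaml                  # basic information about this chart
    │       apiVersion:  chart API version; v2 is the current default
    │       name:        name of the chart
    │       type:        chart type, application or library [optional]
    │       version:     version of the chart itself
    │       appVersion:  version of the application the chart deploys [optional]
    │       description: description of the chart [optional]
    ├── templates                   # templates
    │   ├── deployment.yaml
    │   ├── _helpers.tpl            # custom named templates and helper functions
    │   ├── ingress.yaml
    │   ├── NOTES.txt               # message shown after the chart is installed
    │   ├── serviceaccount.yaml
    │   ├── service.yaml
    │   └── tests                   # test files
    │       └── test-connection.yaml
    └── values.yaml                 # global variables and configurable parameters

Helm built-in variables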

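- Release.Name: the name of the release, as given to helm install.

- Release.Namespace: the namespace the release is installed into.

- Release.IsUpgrade: true when the current operation is an upgrade or a rollback.

- Release.IsInstall: true when the current operation is an install.

- Release.Revision: the revision number of this release. It starts at 1 and increases by 1 with every upgrade or rollback.

- Chart: the contents of Chart.yaml. For example, Chart.Version is the chart's own version and Chart.Name is the chart's name; the application version is Chart.AppVersion.

Create a Helm project. This creates a directory with the same name as the project, and its structure matches the example above: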

[root@master-01 ~]# helm create helm-v1

Creating helm-v1

[root@master-01 ~]# tree helm-v1/

helm-v1/

├── charts

├── Chart.yaml

├── templates

│ ├── deployment.yaml

│ ├── _helpers.tpl

│ ├── hpa.yaml

│ ├── ingress.yaml

│ ├── NOTES.txt

│ ├── serviceaccount.yaml

│ ├── service.yaml

│ └── tests

│ └── test-connection.yaml

└── values.yaml

3 directories, 10 files

Render the resources with Helm without actually creating them by adding the --dry-run option:

[root@master-01 ~]# cd helm-v1/

[root@master-01 helm-v1]# helm install helm-v1 . --dry-run

    NAME: helm-v1
    LAST DEPLOYED: Wed Nov 13 23:15:36 2024
    NAMESPACE: default
    STATUS: pending-install
    REVISION: 1
    HOOKS:
    ---
    # Source: helm-v1/templates/tests/test-connection.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: "helm-v1-test-connection"
      labels:
        helm.sh/chart: helm-v1-0.1.0
        app.kubernetes.io/name: helm-v1
        app.kubernetes.io/instance: helm-v1
        app.kubernetes.io/version: "1.16.0"
        app.kubernetes.io/managed-by: Helm
      annotations:
        "helm.sh/hook": test
    spec:
      containers:
        - name: wget
          image: busybox
          command: ['wget']
          args: ['helm-v1:80']
      restartPolicy: Never
    MANIFEST:
    ---
    # Source: helm-v1/templates/serviceaccount.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: helm-v1
      labels:
        helm.sh/chart: helm-v1-0.1.0
        app.kubernetes.io/name: helm-v1
        app.kubernetes.io/instance: helm-v1
        app.kubernetes.io/version: "1.16.0"
        app.kubernetes.io/managed-by: Helm
    automountServiceAccountToken: true
    ---
    # Source: helm-v1/templates/service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: helm-v1
      labels:
        helm.sh/chart: helm-v1-0.1.0
        app.kubernetes.io/name: helm-v1
        app.kubernetes.io/instance: helm-v1
        app.kubernetes.io/version: "1.16.0"
        app.kubernetes.io/managed-by: Helm
    spec:
      type: ClusterIP
      ports:
        - port: 80
          targetPort: http
          protocol: TCP
          name: http
      selector:
        app.kubernetes.io/name: helm-v1
        app.kubernetes.io/instance: helm-v1
    ---
    # Source: helm-v1/templates/deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: helm-v1
      labels:
        helm.sh/chart: helm-v1-0.1.0
        app.kubernetes.io/name: helm-v1
        app.kubernetes.io/instance: helm-v1
        app.kubernetes.io/version: "1.16.0"
        app.kubernetes.io/managed-by: Helm
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: helm-v1
          app.kubernetes.io/instance: helm-v1
      template:
        metadata:
          labels:
            helm.sh/chart: helm-v1-0.1.0
            app.kubernetes.io/name: helm-v1
            app.kubernetes.io/instance: helm-v1
            app.kubernetes.io/version: "1.16.0"
            app.kubernetes.io/managed-by: Helm
        spec:
          serviceAccountName: helm-v1
          securityContext:
            {}
          containers:
            - name: helm-v1
              securityContext:
                {}
              image: "nginx:1.16.0"
              imagePullPolicy: IfNotPresent
              ports:
                - name: http
                  containerPort: 80
                  protocol: TCP
              livenessProbe:
                httpGet:
                  path: /
                  port: http
              readinessProbe:
                httpGet:
                  path: /
                  port: http
              resources:
                {}

    NOTES:
    1. Get the application URL by running these commands:
      export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=helm-v1,app.kubernetes.io/instance=helm-v1" -o jsonpath="{.items[0].metadata.name}")
      export CONTAINER_PORT=$(kubectl get pod --namespace default $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")
      echo "Visit http://127.0.0.1:8080 to use your application"
      kubectl --namespace default port-forward $POD_NAME 8080:$CONTAINER_PORT

Look at the manifest generated for the Deployment and examine .metadata.labels. It contains five labels:

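    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: helm-v1
      labels:
        helm.sh/chart: helm-v1-0.1.0
        app.kubernetes.io/name: helm-v1
        app.kubernetes.io/instance: helm-v1
        app.kubernetes.io/version: "1.16.0"
        app.kubernetes.io/managed-by: Helm

Switch to the templates directory and see how deployment.yaml defines these labels. It uses include to pull in a named template called helm-v1.labels, which is defined in _helpers.tpl. Because YAML is sensitive to indentation, the - in {{- trims the whitespace in front of the action, and nindent 4 then starts a new line and indents every line of the output by four spaces: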

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: {{ include "helm-v1.fullname" . }}
      labels:
        {{- include "helm-v1.labels" . | nindent 4 }}

Open _helpers.tpl to see how helm-v1.labels is defined. define declares the helm-v1.labels template. Its first key is helm.sh/chart, and the value comes from including the helm-v1.chart template:

    {{/*
    Common labels
    */}}
    {{- define "helm-v1.labels" -}}
    helm.sh/chart: {{ include "helm-v1.chart" . }}
    {{ include "helm-v1.selectorLabels" . }}
    {{- if .Chart.AppVersion }}
    app.kubernetes.io/version: {{ .Chart.AppVersion | quote }}
    {{- end }}
    app.kubernetes.io/managed-by: {{ .Release.Service }}
    {{- end }}

In the same file, look at the definition of helm-v1.chart. It uses printf with the format %s-%s to join the name and version fields from Chart.yaml, which gives helm-v1-0.1.0 here. Any + is then replaced with _, the result is truncated to 63 characters, and a trailing - is removed:

    {{/*
    Create chart name and version as used by the chart label.
    */}}
    {{- define "helm-v1.chart" -}}
    {{- printf "%s-%s" .Chart.Name .Chart.Version | replace "+" "_" | trunc 63 | trimSuffix "-" }}
    {{- end }}

The contents of Chart.yaml are as follows:

[root@master-01 helm-v1]# cat Chart.yaml

apiVersion: v2

name: helm-v1

description: A Helm chart for Kubernetes

# A chart can be either an 'application' or a 'library' chart.

#

# Application charts are a collection of templates that can be packaged into versioned archives

# to be deployed.

#

# Library charts provide useful utilities or functions for the chart developer. They're included as

# a dependency of application charts to inject those utilities and functions into the rendering

# pipeline. Library charts do not define any templates and therefore cannot be deployed.

type: application

# This is the chart version. This version number should be incremented each time you make changes

# to the chart and its templates, including the app version.

# Versions are expected to follow Semantic Versioning (https://semver.org/)

version: 0.1.0

# This is the version number of the application being deployed. This version number should be

# incremented each time you make changes to the application. Versions are not expected to

# follow Semantic Versioning. They should reflect the version the application is using.

# It is recommended to use it with quotes.

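appVersion: "1.16.0"

The second entry in helm-v1.labels includes the helm-v1.selectorLabels template. That template defines two keys. The value of the first is the output of the helm-v1.name template; the value of the second is the built-in Release.Name, the release name given to helm install: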

    {{/*
    Selector labels
    */}}
    {{- define "helm-v1.selectorLabels" -}}
    app.kubernetes.io/name: {{ include "helm-v1.name" . }}
    app.kubernetes.io/instance: {{ .Release.Name }}
    {{- end }}

The helm-v1.name template uses nameOverride from values.yaml if it is set (it is empty here) and otherwise falls back to the name field in Chart.yaml (helm-v1):

    {{/*
    Expand the name of the chart.
    */}}
    {{- define "helm-v1.name" -}}
    {{- default .Chart.Name .Values.nameOverride | trunc 63 | trimSuffix "-" }}
    {{- end }}

The third entry in helm-v1.labels is wrapped in an if: when appVersion is set in Chart.yaml, the template emits the key app.kubernetes.io/version with that value passed through quote, which wraps it in double quotes. The fourth entry, app.kubernetes.io/managed-by, is emitted unconditionally and takes its value from the built-in Release.Service.
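The helm-v1.chart pipeline can be mimicked step by step outside Helm, which makes the effect of each function easier to see. The sketch below is a shell approximation of `printf | replace | trunc | trimSuffix`, not Helm's implementation; the version `0.1.0+build.7` is a made-up example of a version containing `+`.

```shell
# Approximate the helm-v1.chart pipeline for a chart name and version.
chart_label() {
  # printf "%s-%s" .Chart.Name .Chart.Version
  label=$(printf '%s-%s' "$1" "$2")
  # replace "+" "_"
  label=$(printf '%s' "$label" | tr '+' '_')
  # trunc 63
  label=$(printf '%s' "$label" | cut -c1-63)
  # trimSuffix "-" removes a single trailing hyphen, if there is one
  label=${label%-}
  printf '%s\n' "$label"
}

chart_label helm-v1 0.1.0           # prints helm-v1-0.1.0
chart_label helm-v1 0.1.0+build.7   # prints helm-v1-0.1.0_build.7
```

The replacement and truncation exist because Kubernetes label values are limited to 63 characters and may not contain `+`, while chart versions may.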

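Helm flow control

Template files can also use if/else conditions, with (which changes the scope of the current context) and range (similar to a for loop). See the official documentation, or download bitnami's rabbitmq chart and study how it uses them; they are not covered in detail here. Readers with questions can leave a comment or contact the author. The official site also points out many details worth noting, so please read it carefully.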

https://helm.sh/docs/chart_template_guide/control_structures/
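As a small illustration of the three control structures, the sketch below writes an extra template into a chart directory such as helm-v1. Everything under `.Values.demo` (`enabled`, `owner`, `hosts`) and the file name `demo-configmap.yaml` are hypothetical names invented for this example; they are not part of the generated chart.

```shell
# Write a ConfigMap template that uses if/else, with, and range.
# The quoted heredoc delimiter keeps the shell from expanding $i and $host.
write_demo_template() {
  chart_dir=$1
  cat > "$chart_dir/templates/demo-configmap.yaml" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-demo
data:
  {{- if .Values.demo.enabled }}
  enabled: "true"
  {{- else }}
  enabled: "false"
  {{- end }}
  {{- with .Values.demo.owner }}
  owner: {{ . | quote }}
  {{- end }}
  {{- range $i, $host := .Values.demo.hosts }}
  host-{{ $i }}: {{ $host | quote }}
  {{- end }}
EOF
}

# Usage (needs helm and the helm-v1 chart from above):
#   write_demo_template ./helm-v1
#   helm template demo ./helm-v1 \
#     --set demo.enabled=true --set demo.owner=ops \
#     --set 'demo.hosts={a.example,b.example}' \
#     --show-only templates/demo-configmap.yaml
```

Inside the `with` block the dot refers to `.Values.demo.owner`, and the block is skipped entirely when that value is empty; `range` repeats its body once per list element.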