K8S Cloud-Native Storage

- Kubernetes

- 2024-10-31


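Cloud-native storage in Kubernetes (K8s) refers to dynamic, elastic, and scalable storage solutions for containerized applications running in a Kubernetes environment. Its design follows the Kubernetes architecture, so storage management is tightly integrated with the application lifecycle and the deployment workflow.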

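Key features

- Dynamic provisioning: Kubernetes can create PersistentVolumes (PV) on demand in response to PersistentVolumeClaims (PVC), so applications can request storage automatically when they need it.

- Persistence: cloud-native storage solutions usually persist data, so it remains available even when a Pod is rescheduled or restarted.

- Elasticity and scalability: storage capacity can be expanded as application needs grow, helping to maintain performance and availability.

- Multiple storage backends: block, file, and object storage are all supported. Common solutions include Ceph, Rook, OpenEBS, Portworx, and StorageOS.

- High availability: data redundancy and backups allow fast recovery when failures occur.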

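Components of cloud-native storage

- PersistentVolume (PV): a storage resource in the cluster, either created in advance by an administrator or provisioned dynamically, and backed by a concrete implementation such as NFS, iSCSI, or cloud storage.

- PersistentVolumeClaim (PVC): a user's request for storage, specifying the required size and access mode.

- StorageClass: defines the available types of storage and the policies that apply to them, so users can choose a storage tier as needed.

- Dynamic provisioning: through a StorageClass, Kubernetes automatically creates a PV for a PVC, simplifying storage management.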
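To make the relationship between these objects concrete, here is a minimal sketch: a shell function that prints a StorageClass and a PVC that references it by name. Applying the PVC is what triggers dynamic provisioning. The provisioner `example.com/hypothetical-csi` and the names passed in are placeholders, not a real driver; a real cluster would use its own CSI driver (later in this post, `rook-ceph.rbd.csi.ceph.com`).

```shell
#!/bin/sh
# Sketch: print a StorageClass plus a PVC that requests storage from it.
# The provisioner below is a hypothetical placeholder, not a real CSI driver.
render_sc_and_pvc() {
  sc_name="$1"    # StorageClass name
  pvc_name="$2"   # PVC name
  size="$3"       # requested size, e.g. 2Gi
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ${sc_name}
provisioner: example.com/hypothetical-csi   # placeholder driver name
reclaimPolicy: Delete
allowVolumeExpansion: true
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${pvc_name}
spec:
  storageClassName: ${sc_name}   # links the claim to the class
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${size}
EOF
}
```

With a real provisioner substituted, the output could be applied with `render_sc_and_pvc demo-sc demo-claim 2Gi | kubectl apply -f -`; the PV is then created by the driver rather than by hand.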

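Common cloud-native storage solutions

- Rook: automates the management of storage systems such as Ceph.

- OpenEBS: container-based storage focused on persistent storage for containerized applications.

- Portworx: a container storage solution offering high availability and multi-cloud support.

- Ceph: a distributed storage system providing block, file, and object storage.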

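Use cases

- Databases: applications that require persistence and high availability.

- Big data processing: workloads with high-performance storage requirements.

- Microservice architectures: flexible management of each service's storage needs.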

Cloud-native storage makes data management in Kubernetes simpler and more efficient, matches the needs of modern applications, and fits well with DevOps and CI/CD workflows.

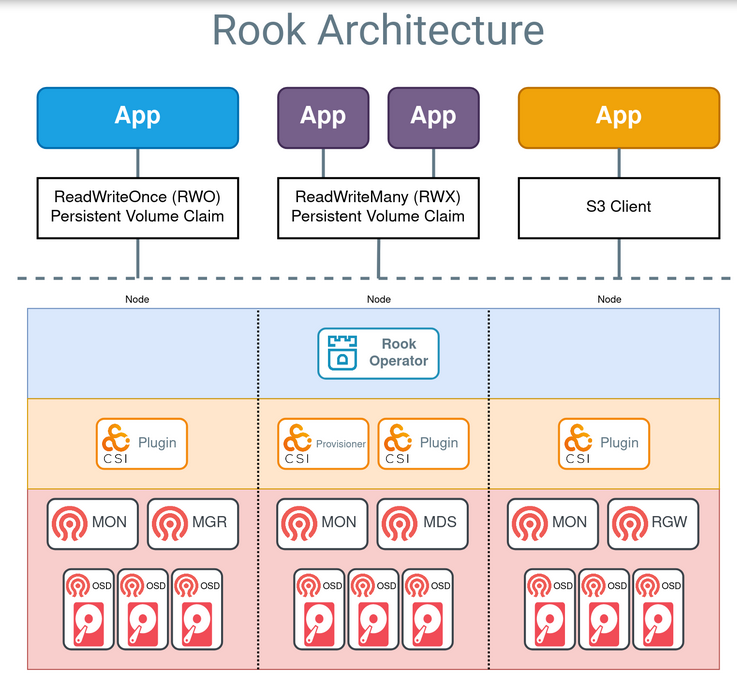

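Rook

Rook is an open-source, cloud-native storage orchestrator designed for Kubernetes. It automates storage management so that deploying, configuring, and maintaining a storage system becomes much simpler.

Key features and concepts of Rook:

1. Automation: Rook automates the deployment and management of storage clusters, including monitoring, scaling, and failure recovery, reducing the need for manual intervention.

2. Native integration: Rook integrates natively with Kubernetes and manages storage resources through the Kubernetes API and CRDs (Custom Resource Definitions).

3. Storage backend: Rook's storage provider is Ceph, a distributed storage system. Earlier Rook releases also shipped providers for other storage systems, but current releases focus on Ceph.

4. Dynamic provisioning: once a StorageClass is defined, users request storage with PersistentVolumeClaims (PVC) and the matching PersistentVolumes (PV) are created dynamically, which simplifies requesting and managing storage.

5. High availability and fault tolerance: Rook relies on data replication and clustering to keep data highly available and to recover quickly when a node fails.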

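Key components

1. Rook Operator

Role: manages and operates the entire storage cluster, including creation, configuration, scaling, and failure recovery.

Characteristics: watches the Rook custom resources in Kubernetes and automatically performs the required actions (such as creating OSDs and MONs). Users define the storage cluster through ordinary Kubernetes resources.

2. Storage cluster (Ceph)

Role: the actual storage backend, providing block, object, and file-system storage.

Characteristics: Ceph is the storage cluster Rook deploys; it provides high availability, scalability, and data redundancy.

3. CRDs (Custom Resource Definitions)

Role: define custom Kubernetes resources that describe the storage clusters Rook manages and their configuration.

Characteristics: the CRDs include CephCluster, CephBlockPool, CephObjectStore, CephFilesystem, and others; users describe the configuration and properties of the storage cluster with these resources. Because the configuration is declarative, the storage cluster is easy to manage and update.

4. Monitoring and logging

Role: monitors the health of the storage cluster and provides performance metrics and logs.

Characteristics: integrates with tools such as Prometheus and Grafana for cluster monitoring, and provides alerting and logging to help with troubleshooting and performance analysis.

5. StorageClasses

Role: define the policies and parameters of different storage types for use by Kubernetes PVCs (PersistentVolumeClaims).

Characteristics: a StorageClass specifies how storage from the Rook-deployed cluster is consumed, for example which storage pool to use (the pool in turn defines the replication factor).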
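Because Rook is driven entirely by these CRDs, a quick sanity check after installing them is to confirm the core ones exist. The sketch below reads CRD names on stdin and prints any missing ones; the four names checked are a subset chosen for illustration, not the full list Rook installs.

```shell
#!/bin/sh
# Sketch: report which of a few core Rook CRDs are missing.
# Reads CRD names (one per line) on stdin; the list is an illustrative subset.
missing_rook_crds() {
  names="$(cat)"
  for crd in cephclusters.ceph.rook.io cephblockpools.ceph.rook.io \
             cephfilesystems.ceph.rook.io cephobjectstores.ceph.rook.io; do
    # -x: whole-line match, -F: treat the dots literally
    if ! printf '%s\n' "$names" | grep -qxF "$crd"; then
      echo "$crd"
    fi
  done
}
```

Usage: `kubectl get crd -o name | sed 's#.*/##' | missing_rook_crds`. The sed strips the `customresourcedefinition.apiextensions.k8s.io/` prefix, and empty output means all four are present.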

Deploying Rook

| Item | Value |
|---|---|
| Cluster nodes | 3 masters, 2 worker nodes |
| CPU | 2 cores |
| Memory | 5 GB |

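Because the cluster has only a few nodes, remove the taint from the master nodes so that Pods can be scheduled on them; otherwise some Ceph cluster components may fail to deploy.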
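The per-node untaint commands shown next can also be run as a single loop. A minimal sketch, assuming the taint key used by recent kubeadm clusters (`node-role.kubernetes.io/control-plane`); older clusters may use `node-role.kubernetes.io/master` instead.

```shell
#!/bin/sh
# Sketch: remove the control-plane NoSchedule taint from the given nodes.
# The trailing "-" tells kubectl taint to remove the taint; "|| true" keeps
# the loop going if a node does not carry the taint.
untaint_control_plane() {
  for node in "$@"; do
    kubectl taint node "$node" node-role.kubernetes.io/control-plane:NoSchedule- || true
  done
}
```

Usage: `untaint_control_plane master-01 master-02 master-03`, then verify with `kubectl describe nodes | grep Taints`.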

```shell
[root@master-01 ~]# kubectl taint node master-01 node-role.kubernetes.io/control-plane-
node/master-01 untainted
[root@master-01 ~]# kubectl taint node master-02 node-role.kubernetes.io/control-plane-
node/master-02 untainted
[root@master-01 ~]# kubectl taint node master-03 node-role.kubernetes.io/control-plane:NoSchedule-
node/master-03 untainted
[root@master-01 ~]# kubectl describe nodes | grep Taints
Taints: <none>
Taints: <none>
Taints: <none>
Taints: <none>
Taints: <none>
```

Make sure the clock is correct on all nodes:

```shell
[root@master-01 ~]# ntpdate time2.aliyun.com
27 Oct 22:14:04 ntpdate[108096]: adjust time server 203.107.6.88 offset -0.108387 sec
[root@master-01 ~]# date
Sun Oct 27 22:12:42 CST 2024
```

Add a 100 GB disk to master-03, node-01, and node-02, the three nodes that will run Ceph OSDs (node-01 is shown here as an example):

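```shell
[root@node-01 ~]# lsblk -f
NAME FSTYPE LABEL UUID MOUNTPOINT
sdb
```

The empty FSTYPE column shows that sdb is a raw disk with no file system, which is what Rook needs. Next, clone the Rook manifests with Git. (Tip: if the clone fails because of network problems, resolve github.com and github.global.ssl.fastly.net with nslookup and add the results to the local /etc/hosts file.)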

```shell
[root@master-01 ~]# git clone --single-branch --branch v1.14.11 https://github.com/rook/rook.git
Cloning into 'rook'...
remote: Enumerating objects: 99413, done.
remote: Counting objects: 100% (532/532), done.
remote: Compressing objects: 100% (297/297), done.
remote: Total 99413 (delta 413), reused 266 (delta 235), pack-reused 98881 (from 1)
Receiving objects: 100% (99413/99413), 52.80 MiB | 7.07 MiB/s, done.
Resolving deltas: 100% (69737/69737), done.
Note: checking out '3b1ab605a88b976127252e606d583599b7732add'.
```

/etc/hosts entries must map an IP address to a host name (not a URL):

```shell
[root@master-01 ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.132.169 master-01
192.168.132.170 master-02
192.168.132.171 master-03
# If this is not a highly available cluster, use master-01's IP here
192.168.132.236 master-lb
192.168.132.172 node-01
192.168.132.173 node-02
#github
20.205.243.166 github.com
211.104.160.39 github.global.ssl.fastly.net
```

Change to the examples directory and create the CRDs and common resources with kubectl:

```shell
[root@master-01 ~]# cd rook/deploy/examples
[root@master-01 examples]# kubectl create -f crds.yaml -f common.yaml
customresourcedefinition.apiextensions.k8s.io/cephblockpoolradosnamespaces.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephblockpools.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephbucketnotifications.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephbuckettopics.ceph.rook.io created
...(output truncated)...
```

Edit operator.yaml: change the image addresses, and enable the discovery daemon, which watches the nodes in the cluster for raw storage devices:

```shell
[root@master-01 examples]# cat operator.yaml | grep caijxlinux
image: registry.cn-guangzhou.aliyuncs.com/caijxlinux/ceph:v1.14.11
ROOK_CSI_CEPH_IMAGE: "quay.io/cephcsi/cephcsi:v3.11.0"
ROOK_CSI_REGISTRAR_IMAGE: "registry.cn-guangzhou.aliyuncs.com/caijxlinux/csi-node-driver-registrar:v2.10.1"
ROOK_CSI_RESIZER_IMAGE: "registry.cn-guangzhou.aliyuncs.com/caijxlinux/csi-resizer:v1.10.1"
ROOK_CSI_PROVISIONER_IMAGE: "registry.cn-guangzhou.aliyuncs.com/caijxlinux/csi-provisioner:v4.0.1"
ROOK_CSI_SNAPSHOTTER_IMAGE: "registry.cn-guangzhou.aliyuncs.com/caijxlinux/csi-snapshotter:v7.0.2"
ROOK_CSI_ATTACHER_IMAGE: "registry.cn-guangzhou.aliyuncs.com/caijxlinux/csi-attacher:v4.5.1"
[root@master-01 examples]# cat operator.yaml | grep DISCOVERY
ROOK_ENABLE_DISCOVERY_DAEMON: "true"
```

Create the resources and check their status. (Tip: if other images still cannot be pulled during creation, change their addresses to the mirror under registry.cn-guangzhou.aliyuncs.com. The author has mirrored all the images to a registry in China; the mirroring method is described at the end of this post.)

```shell
[root@master-01 examples]# kubectl create -f operator.yaml
configmap/rook-ceph-operator-config created
deployment.apps/rook-ceph-operator created
```

The operator and discover containers must be Running before you can build the Ceph cluster:

```shell
[root@master-01 examples]# kubectl get pods -n rook-ceph
NAME READY STATUS RESTARTS AGE
rook-ceph-operator-f7f45cfdc-f4t9r 1/1 Running 0 9m30s
rook-discover-26p8h 1/1 Running 0 6m48s
rook-discover-5jd8x 1/1 Running 0 6m48s
rook-discover-js6nw 1/1 Running 0 6m48s
rook-discover-r598t 1/1 Running 0 6m48s
rook-discover-t84jk 1/1 Running 0 6m48s
```

Next, edit cluster.yaml.

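To log in to the web UI (dashboard) over HTTP, disable SSL in the dashboard section:

```yaml
dashboard:
  ssl: false
```

Change the storage configuration so that the cluster uses neither all nodes nor all storage devices:

```yaml
storage: # cluster level storage configuration and selection
  useAllNodes: false
  useAllDevices: false
```

Configure the nodes that join the Ceph cluster. Note: each name field must match the value of the node's kubernetes.io/hostname label: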

```yaml
storage: # cluster level storage configuration and selection
  useAllNodes: false
  useAllDevices: false
  #deviceFilter:
  config:
    # crushRoot: "custom-root" # specify a non-default root label for the CRUSH map
    # metadataDevice: "md0" # specify a non-rotational storage so ceph-volume will use it as block db device of bluestore.
    # databaseSizeMB: "1024" # uncomment if the disks are smaller than 100 GB
    # osdsPerDevice: "1" # this value can be overridden at the node or device level
    # encryptedDevice: "true" # the default value for this option is "false"
    # deviceClass: "myclass" # specify a device class for OSDs in the cluster
  allowDeviceClassUpdate: false # whether to allow changing the device class of an OSD after it is created
  allowOsdCrushWeightUpdate: false # whether to allow resizing the OSD crush weight after osd pvc is increased
  # Individual nodes and their config can be specified as well, but 'useAllNodes' above must be set to false. Then, only the named
  # nodes below will be used as storage resources. Each node's 'name' field should match their 'kubernetes.io/hostname' label.
  nodes:
    - name: "master-03"
      devices: # specific devices to use for storage can be specified for each node
        - name: "sdb"
    - name: "node-01"
      devices: # specific devices to use for storage can be specified for each node
        - name: "sdb"
    - name: "node-02"
      devices: # specific devices to use for storage can be specified for each node
        - name: "sdb"
```

Create the resources and check their status, waiting until all Pods are Running. (Note: if an image inside a Pod cannot be pulled, change it with kubectl edit.)

```shell
[root@master-01 examples]# kubectl create -f cluster.yaml
cephcluster.ceph.rook.io/rook-ceph created
[root@master-01 examples]# kubectl get pods -n rook-ceph
NAME READY STATUS RESTARTS AGE
csi-cephfsplugin-5wbmt 3/3 Running 1 (39m ago) 40m
csi-cephfsplugin-fc6h6 3/3 Running 1 (39m ago) 40m
csi-cephfsplugin-lq55s 3/3 Running 1 (39m ago) 40m
csi-cephfsplugin-ltqtx 3/3 Running 0 40m
csi-cephfsplugin-ph45n 3/3 Running 1 (39m ago) 40m
csi-cephfsplugin-provisioner-db78bfb7f-w8s4m 6/6 Running 1 (36m ago) 40m
csi-cephfsplugin-provisioner-db78bfb7f-wc7vd 6/6 Running 1 (36m ago) 40m
csi-rbdplugin-2tznk 3/3 Running 1 (39m ago) 40m
csi-rbdplugin-6d55l 3/3 Running 1 (39m ago) 40m
csi-rbdplugin-8gmgh 3/3 Running 1 (39m ago) 40m
csi-rbdplugin-f9558 3/3 Running 0 40m
csi-rbdplugin-provisioner-787bcd9949-gmvkg 6/6 Running 3 (35m ago) 40m
csi-rbdplugin-provisioner-787bcd9949-rsvxh 6/6 Running 1 (38m ago) 40m
csi-rbdplugin-v6zt6 3/3 Running 1 (39m ago) 40m
rook-ceph-crashcollector-master-02-cc6788bd9-fhrwc 1/1 Running 0 41m
rook-ceph-crashcollector-master-03-74674674-snz8c 1/1 Running 0 40m
rook-ceph-crashcollector-node-01-7d9c88bff4-vwgwh 1/1 Running 0 40m
rook-ceph-crashcollector-node-02-745f798b4c-b8bbx 1/1 Running 0 40m
rook-ceph-exporter-master-02-fc94cc8c8-kx6wc 1/1 Running 0 41m
rook-ceph-exporter-master-03-79596bb565-748vg 1/1 Running 0 40m
rook-ceph-exporter-node-01-c7d54f7b9-b6kqg 1/1 Running 0 40m
rook-ceph-exporter-node-02-7c965c777b-d27dc 1/1 Running 0 40m
rook-ceph-mgr-a-756b6b9bf6-5x99k 3/3 Running 0 41m
rook-ceph-mgr-b-7f87996d55-4pf8m 3/3 Running 0 41m
rook-ceph-mon-a-94f6456d-68tjx 2/2 Running 0 42m
rook-ceph-mon-b-697cc8b754-qdz9m 2/2 Running 0 41m
rook-ceph-mon-c-779cd6975-5nbd9 2/2 Running 0 41m
rook-ceph-operator-f7f45cfdc-7td8g 1/1 Running 0 50m
rook-ceph-osd-0-64bf94fdd4-pmxbc 2/2 Running 0 40m
rook-ceph-osd-1-5fbb8df94-vkztf 2/2 Running 0 40m
rook-ceph-osd-2-9d6d8b56f-vq8qv 2/2 Running 0 40m
rook-ceph-osd-prepare-master-03-hwls8 0/1 Completed 0 41m
rook-ceph-osd-prepare-node-01-fd89c 0/1 Completed 0 41m
rook-ceph-osd-prepare-node-02-xj9b5 0/1 Completed 0 41m
rook-discover-594xg 1/1 Running 0 48m
rook-discover-j8vbt 1/1 Running 0 48m
rook-discover-kfpmw 1/1 Running 0 48m
rook-discover-p7gsq 1/1 Running 0 48m
rook-discover-z48k4 1/1 Running 0 48m
```

Use the toolbox provided by Rook to check whether the cluster is usable; if it does not report HEALTH_OK, investigate the cluster status. (Note: do not remove these resources directly with kubectl delete -f, which leads to errors; see the end of this post for how to clean up the cluster.)

```shell
[root@master-01 examples]# kubectl create -f toolbox.yaml
```

Log in to the toolbox and run ceph status and ceph osd status to check the cluster state:

```shell
[root@master-01 examples]# kubectl -n rook-ceph exec -it deploy/rook-ceph-tools -- bash
bash-5.1$ ceph status
  cluster:
    id:     f98fd3d8-905a-468c-bdeb-ef67481258b4
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum a,b,c (age 42m)
    mgr: a(active, since 40m), standbys: b
    osd: 3 osds: 3 up (since 41m), 3 in (since 42m)

  data:
    pools:   1 pools, 1 pgs
    objects: 2 objects, 449 KiB
    usage:   80 MiB used, 300 GiB / 300 GiB avail
    pgs:     1 active+clean

bash-5.1$ ceph osd status
ID HOST USED AVAIL WR OPS WR DATA RD OPS RD DATA STATE
0 node-01 26.8M 99.9G 0 0 0 0 exists,up
1 node-02 26.8M 99.9G 0 0 0 0 exists,up
2 master-03 26.8M 99.9G 0 0 0 0 exists,up
```

Check the cluster status with kubectl:

```shell
[root@master-01 examples]# kubectl get cephclusters.ceph.rook.io -n rook-ceph
NAME DATADIRHOSTPATH MONCOUNT AGE PHASE MESSAGE HEALTH EXTERNAL FSID
rook-ceph /var/lib/rook 3 66m Ready Cluster created successfully HEALTH_OK f98fd3d8-905a-468c-bdeb-ef67481258b4
```

Create the snapshot controller (snapshotter) and the VolumeSnapshot CRDs in kube-system:

```shell
[root@master-01 snapshotter]# kubectl create -f . -n kube-system
serviceaccount/snapshot-controller created
clusterrole.rbac.authorization.k8s.io/snapshot-controller-runner created
clusterrolebinding.rbac.authorization.k8s.io/snapshot-controller-role created
role.rbac.authorization.k8s.io/snapshot-controller-leaderelection created
rolebinding.rbac.authorization.k8s.io/snapshot-controller-leaderelection created
statefulset.apps/snapshot-controller created
service/snapshot-controller created
customresourcedefinition.apiextensions.k8s.io/volumesnapshotclasses.snapshot.storage.k8s.io created
customresourcedefinition.apiextensions.k8s.io/volumesnapshotcontents.snapshot.storage.k8s.io created
customresourcedefinition.apiextensions.k8s.io/volumesnapshots.snapshot.storage.k8s.io created
[root@master-01 snapshotter]# kubectl get pods -n kube-system -l app=snapshot-controller
NAME READY STATUS RESTARTS AGE
snapshot-controller-0 1/1 Running 0 51s
```

Configure the Ceph dashboard: create a NodePort Service to expose it:

```shell
[root@master-01 ~]# vim dashboard-np.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: rook-ceph-mgr
    ceph_daemon_id: a
    rook_cluster: rook-ceph
  name: rook-ceph-mgr-dashboard-np
  namespace: rook-ceph
spec:
  ports:
    - name: http-dashboard
      port: 7000
      protocol: TCP
      targetPort: 7000
  selector:
    app: rook-ceph-mgr
    ceph_daemon_id: a
    rook_cluster: rook-ceph
  sessionAffinity: None
  type: NodePort
```

Create the resource and check its status:

```shell
[root@master-01 ~]# kubectl get svc -n rook-ceph
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
rook-ceph-exporter ClusterIP 10.96.157.138 <none> 9926/TCP 87m
rook-ceph-mgr ClusterIP 10.96.104.44 <none> 9283/TCP 87m
rook-ceph-mgr-dashboard ClusterIP 10.96.238.8 <none> 7000/TCP 87m
rook-ceph-mgr-dashboard-np NodePort 10.96.22.121 <none> 7000:31514/TCP 10s
rook-ceph-mon-a ClusterIP 10.96.180.8 <none> 6789/TCP,3300/TCP 88m
rook-ceph-mon-b ClusterIP 10.96.216.20 <none> 6789/TCP,3300/TCP 88m
rook-ceph-mon-c ClusterIP 10.96.101.242 <none> 6789/TCP,3300/TCP 88m
```

Open the dashboard in a browser at the node IP plus the NodePort, over HTTP.

The default login user is admin. The password is stored base64-encoded in a Secret, so decode it with base64:

```shell
[root@master-01 ~]# kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo
|#&%M^fU}Rb[iMB5qv:,
```
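The NodePort (31514 in the output above) is assigned by Kubernetes and differs between clusters. A small helper can read it from the Service and print the dashboard URL; a sketch assuming the Service name `rook-ceph-mgr-dashboard-np` defined above and a single port entry.

```shell
#!/bin/sh
# Sketch: build the dashboard URL from a node IP and the NodePort that
# Kubernetes assigned to the rook-ceph-mgr-dashboard-np Service.
dashboard_url() {
  node_ip="$1"
  port="$(kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard-np \
    -o jsonpath='{.spec.ports[0].nodePort}')" || return 1
  [ -n "$port" ] || return 1   # no port found: Service missing or not NodePort
  echo "http://${node_ip}:${port}"
}
```

For example, `dashboard_url 192.168.132.172` would print `http://192.168.132.172:31514` for the Service shown above. The scheme is HTTP because SSL was disabled in cluster.yaml.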

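Creating a storage pool

Create the StorageClass and the Ceph storage pool. Here the replica count is changed to 2 to reduce resource usage (not recommended in production):

```shell
[root@master-01 rbd]# vim /root/rook/deploy/examples/csi/rbd/storageclass.yaml
```

```yaml
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph # namespace:cluster
spec:
  failureDomain: host
  replicated:
    size: 2
# ......
```

Create the resources and check their status: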

```shell
[root@master-01 rbd]# kubectl create -f /root/rook/deploy/examples/csi/rbd/storageclass.yaml
cephblockpool.ceph.rook.io/replicapool created
storageclass.storage.k8s.io/rook-ceph-block created
[root@master-01 rbd]# kubectl get cephblockpool -n rook-ceph
NAME PHASE TYPE FAILUREDOMAIN AGE
replicapool Ready Replicated host 25s
[root@master-01 rbd]# kubectl get storageclasses.storage.k8s.io
NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE
rook-ceph-block rook-ceph.rbd.csi.ceph.com Delete Immediate true 38s
```

The new pool also appears on the Pools page of the dashboard.
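Lowering the replica count trades redundancy for capacity. With three 100 GiB OSDs (300 GiB raw, per ceph status above), each object is stored `size` times, so usable space is roughly raw divided by size. A back-of-the-envelope sketch; it ignores the headroom Ceph keeps before its full ratio, so treat the result as an upper bound.

```shell
#!/bin/sh
# Sketch: rough usable capacity of a replicated pool.
# usable ~= raw / replica_size (integer GiB); Ceph's full-ratio headroom
# is not subtracted, so this is an upper bound, not an exact figure.
usable_gib() {
  raw_gib="$1"       # total raw capacity, e.g. from `ceph status`
  replica_size="$2"  # spec.replicated.size of the CephBlockPool
  echo $(( raw_gib / replica_size ))
}
```

`usable_gib 300 2` gives 150, compared with 100 for `usable_gib 300 3` (three replicas).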

Creating a PVC

Create a PVC that uses the storageClassName field:

```shell
[root@master-01 ~]# vim auto-bound.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: auto-claim
  labels:
    app: caijx
spec:
  storageClassName: rook-ceph-block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
```

After the PVC is created, it is automatically bound to a PV, and the corresponding image appears in the dashboard:

```shell
[root@master-01 ~]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE
auto-claim Bound pvc-598f57b5-0144-438a-9593-0520ae924b77 2Gi RWO rook-ceph-block <unset> 4s
[root@master-01 ~]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE
pvc-598f57b5-0144-438a-9593-0520ae924b77 2Gi RWO Delete Bound default/auto-claim rook-ceph-block <unset> 7s
[root@master-01 ~]# kubectl describe pv pvc-598f57b5-0144-438a-9593-0520ae924b77
Name:            pvc-598f57b5-0144-438a-9593-0520ae924b77
Labels:          <none>
Annotations:     pv.kubernetes.io/provisioned-by: rook-ceph.rbd.csi.ceph.com
                 volume.kubernetes.io/provisioner-deletion-secret-name: rook-csi-rbd-provisioner
                 volume.kubernetes.io/provisioner-deletion-secret-namespace: rook-ceph
Finalizers:      [external-provisioner.volume.kubernetes.io/finalizer kubernetes.io/pv-protection]
StorageClass:    rook-ceph-block
Status:          Bound
Claim:           default/auto-claim
Reclaim Policy:  Delete
Access Modes:    RWO
VolumeMode:      Filesystem
Capacity:        2Gi
Node Affinity:   <none>
Message:
Source:
    Type:              CSI (a Container Storage Interface (CSI) volume source)
    Driver:            rook-ceph.rbd.csi.ceph.com
    FSType:            ext4
    VolumeHandle:      0001-0009-rook-ceph-0000000000000002-dd6a6cbd-192d-4862-8ac9-e9a269d6cd5c
    ReadOnly:          false
    VolumeAttributes:      clusterID=rook-ceph
                           imageFeatures=layering
                           imageFormat=2
                           imageName=csi-vol-dd6a6cbd-192d-4862-8ac9-e9a269d6cd5c
                           journalPool=replicapool
                           pool=replicapool
                           storage.kubernetes.io/csiProvisionerIdentity=1730219449657-9615-rook-ceph.rbd.csi.ceph.com
Events:          <none>
```
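The `pool` and `imageName` volume attributes above identify the RBD image behind the PV, which is the image listed in the dashboard. A sketch that extracts them with jsonpath so the image can be inspected from the toolbox; it assumes the PV was provisioned by the Ceph RBD CSI driver, as here.

```shell
#!/bin/sh
# Sketch: map a PV created by the Ceph RBD CSI driver to "<pool>/<image>",
# using the pool and imageName volume attributes shown by `kubectl describe pv`.
rbd_image_for_pv() {
  pv="$1"
  pool="$(kubectl get pv "$pv" -o jsonpath='{.spec.csi.volumeAttributes.pool}')" || return 1
  image="$(kubectl get pv "$pv" -o jsonpath='{.spec.csi.volumeAttributes.imageName}')" || return 1
  echo "${pool}/${image}"
}
```

Usage: `kubectl -n rook-ceph exec deploy/rook-ceph-tools -- rbd info "$(rbd_image_for_pv pvc-598f57b5-0144-438a-9593-0520ae924b77)"` shows the size and features of the backing image.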

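Configuring StatefulSet volumeClaimTemplates

In a Kubernetes StatefulSet, volumeClaimTemplates defines the persistent volume each Pod should use. Unlike other controllers such as Deployment, a StatefulSet automatically creates a separate PersistentVolumeClaim (PVC) for each Pod, so every replica gets its own storage.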
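The PVCs a StatefulSet creates follow a fixed naming pattern, `<template name>-<StatefulSet name>-<ordinal>`, which is what makes each replica find its own volume again after a restart. A small sketch that computes the expected names; the arguments are whatever template name, StatefulSet name, and replica count you use.

```shell
#!/bin/sh
# Sketch: list the PVC names a StatefulSet creates from a volumeClaimTemplate.
# Kubernetes names them <template-name>-<statefulset-name>-<ordinal>.
sts_pvc_names() {
  template="$1"; sts="$2"; replicas="$3"
  i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "${template}-${sts}-${i}"
    i=$((i + 1))
  done
}
```

For the template `www` in StatefulSet `web` with 3 replicas this yields www-web-0, www-web-1, and www-web-2, so `sts_pvc_names www web 3 | xargs kubectl get pvc` checks exactly those claims. By default these PVCs are kept when the StatefulSet is scaled down or deleted, so they must be removed explicitly.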

```shell
[root@master-01 ~]# vim statfulset-sc.yaml
```

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx # selector that matches the Pod labels
  replicas: 3 # number of replicas (default 1)
  template:
    metadata:
      labels:
        app: nginx # Pod label; must match .spec.selector.matchLabels
    spec:
      terminationGracePeriodSeconds: 10 # graceful termination period
      containers:
        - name: nginx
          image: registry.cn-guangzhou.aliyuncs.com/caijxlinux/nginx:v1.15.1 # container image
          ports:
            - containerPort: 80 # port exposed by the container
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html # mount path
  volumeClaimTemplates:
    - metadata:
        name: www
      spec:
        accessModes: [ "ReadWriteOnce" ] # access mode
        storageClassName: "rook-ceph-block" # StorageClass
        resources:
          requests:
            storage: 1Gi # requested size
```

Check the resource status: a PV and a PVC have been bound automatically for each Pod:

```shell
[root@master-01 ~]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE
pvc-6b562a74-e1be-43e8-b8ef-6e2f3b2f6f15 1Gi RWO Delete Bound default/www-web-2 rook-ceph-block <unset> 26s
pvc-bbb19c02-7a53-4653-8fa3-b80fc3060f0a 1Gi RWO Delete Bound default/www-web-1 rook-ceph-block <unset> 66s
pvc-e71c775a-034b-4965-afc7-916410d0868c 1Gi RWO Delete Bound default/www-web-0 rook-ceph-block <unset> 94s
[root@master-01 ~]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE
www-web-0 Bound pvc-e71c775a-034b-4965-afc7-916410d0868c 1Gi RWO rook-ceph-block <unset> 96s
www-web-1 Bound pvc-bbb19c02-7a53-4653-8fa3-b80fc3060f0a 1Gi RWO rook-ceph-block <unset> 68s
www-web-2 Bound pvc-6b562a74-e1be-43e8-b8ef-6e2f3b2f6f15 1Gi RWO rook-ceph-block <unset> 29s
```

Create the pools for a shared file system (CephFS):

```shell
[root@master-01 examples]# kubectl create -f /root/rook/deploy/examples/filesystem.yaml
cephfilesystem.ceph.rook.io/myfs created
cephfilesystemsubvolumegroup.ceph.rook.io/myfs-csi created
```

Log in to the dashboard to view the newly created Pools and File System.
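The file system is not usable until Rook has started its MDS daemons, so it can help to wait for the resource to become ready before creating the StorageClass. A polling sketch, assuming the Rook resource reports `.status.phase` (the PHASE column that `kubectl get cephfilesystem -n rook-ceph` prints) and that `Ready` is the target value; the retry count and delay are arbitrary defaults.

```shell
#!/bin/sh
# Sketch: poll a custom resource until .status.phase reaches a wanted value.
# Arguments: KIND NAME NAMESPACE WANT [TRIES=60] [DELAY_SECONDS=5]
wait_for_phase() {
  kind="$1"; name="$2"; ns="$3"; want="$4"
  tries="${5:-60}"; delay="${6:-5}"
  n=0
  while [ "$n" -lt "$tries" ]; do
    phase="$(kubectl -n "$ns" get "$kind" "$name" -o jsonpath='{.status.phase}' 2>/dev/null)"
    if [ "$phase" = "$want" ]; then
      echo "$kind/$name is $want"
      return 0
    fi
    n=$((n + 1))
    sleep "$delay"
  done
  echo "timed out waiting for $kind/$name to reach $want (last: ${phase:-none})" >&2
  return 1
}
```

Usage: `wait_for_phase cephfilesystem myfs rook-ceph Ready`.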

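Create the StorageClass for the shared file system:

```shell
[root@master-01 cephfs]# kubectl create -f /root/rook/deploy/examples/csi/cephfs/storageclass.yaml
storageclass.storage.k8s.io/rook-cephfs created
```

Mount a volume through this StorageClass. The PV is bound automatically and the files are shared: all three Pods see the same mounted content.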

```shell
[root@master-01 cephfs]# vim filesystem.yaml
```

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nginx-share-pvc
spec:
  storageClassName: rook-cephfs # StorageClass to use
  accessModes:
    - ReadWriteMany # multiple Pods may mount it read-write
  resources:
    requests:
      storage: 1Gi # requested size
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx # selector that matches the Pod labels
  replicas: 3 # number of replicas
  template:
    metadata:
      labels:
        app: nginx # Pod label; must match the selector
    spec:
      containers:
        - name: nginx
          image: registry.cn-guangzhou.aliyuncs.com/caijxlinux/nginx:v1.15.1
          imagePullPolicy: IfNotPresent # do not pull if the image exists locally
          ports:
            - containerPort: 80 # port exposed by the container
              name: web
          volumeMounts:
            - name: www # name of the volume to mount
              mountPath: /usr/share/nginx/html # mount path
      volumes:
        - name: www # volume name
          persistentVolumeClaim:
            claimName: nginx-share-pvc # references the PVC defined above
```

```shell
[root@master-01 cephfs]# kubectl exec -it web-85fff65f5f-n9fq9 -- bash
root@web-85fff65f5f-n9fq9:/# echo 'share file' > /usr/share/nginx/html/index.html
root@web-85fff65f5f-n9fq9:/# exit
exit
[root@master-01 cephfs]# kubectl exec -it web-85fff65f5f-sb58k -- cat /usr/share/nginx/html/index.html
share file
```

Dynamic volume expansion

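Before expanding a PVC, check the following:

1. Expanding a shared file system (CephFS) PVC requires Kubernetes 1.15+.
2. Expanding a block storage (RBD) PVC requires Kubernetes 1.16+.
3. The StorageClass must have allowVolumeExpansion set to true.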
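The steps below resize PVCs interactively with `kubectl edit`. A non-interactive alternative is a merge patch on the requested size, guarded by the allowVolumeExpansion check from item 3. A sketch; the function name is hypothetical and it assumes the PVC has a storageClassName set.

```shell
#!/bin/sh
# Sketch: patch a PVC's requested size after checking that its StorageClass
# allows volume expansion. Arguments: PVC NEW_SIZE [NAMESPACE=default]
expand_pvc() {
  pvc="$1"; size="$2"; ns="${3:-default}"
  sc="$(kubectl -n "$ns" get pvc "$pvc" -o jsonpath='{.spec.storageClassName}')" || return 1
  allowed="$(kubectl get storageclass "$sc" -o jsonpath='{.allowVolumeExpansion}')"
  if [ "$allowed" != "true" ]; then
    echo "StorageClass $sc does not allow volume expansion" >&2
    return 1
  fi
  kubectl -n "$ns" patch pvc "$pvc" --type merge \
    -p "{\"spec\":{\"resources\":{\"requests\":{\"storage\":\"$size\"}}}}"
}
```

Usage: `expand_pvc nginx-share-pvc 2Gi` performs the same change as the edit shown next. Kubernetes only allows growing a PVC, so a smaller size is rejected by the API server.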

Expand the file system PVC created earlier:

```shell
[root@master-01 cephfs]# kubectl edit pvc nginx-share-pvc
```

```yaml
resources:
  requests:
    storage: 2Gi
```

Check the PVC status: it has been expanded to 2 GiB:

```shell
[root@master-01 cephfs]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE
nginx-share-pvc Bound pvc-2eb6f30e-cecf-40c8-be9d-6842665b37eb 2Gi RWX rook-cephfs <unset> 18h
```

Inside a container that mounts the PV, the file system has also grown to 2 GiB:

```shell
[root@master-01 cephfs]# kubectl exec -it web-85fff65f5f-4ktxp -- df -Th | grep ceph
10.96.180.8:6789,10.96.216.20:6789,10.96.101.242:6789:/volumes/csi/csi-vol-b4a01593-7d9e-4e81-9c01-feb4bbab5d2c/c0fe46bb-5368-4d16-b5a8-6b92e4ec3765 ceph 2.0G 0 2.0G 0% /usr/share/nginx/html
```

Next, expand the block storage PVC auto-claim:

```shell
[root@master-01 ~]# kubectl edit pvc auto-claim
```

```yaml
resources:
  requests:
    storage: 3Gi
```

Check the PV and PVC again. The PV has already been expanded and the dashboard also shows 3 GiB, but the PVC still reports the old size because the PVC and Pod status lag behind (the author saw the expansion complete after about 5 to 10 minutes). For a filesystem-mode RBD volume, the final file system resize is done on the node, so a PVC that no Pod is using may keep reporting the old size until a Pod mounts it.

```shell
[root@master-01 ~]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE
pvc-03606cc2-6282-4ef1-99bd-413c6cc06289 3Gi RWO Delete Bound default/auto-claim rook-ceph-block <unset> 3m32s
[root@master-01 ~]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE
auto-claim Bound pvc-03606cc2-6282-4ef1-99bd-413c6cc06289 2Gi RWO rook-ceph-block <unset> 3m35s
```

List the CSI drivers:

```shell
[root@master-01 ~]# kubectl get csidrivers.storage.k8s.io
NAME ATTACHREQUIRED PODINFOONMOUNT STORAGECAPACITY TOKENREQUESTS REQUIRESREPUBLISH MODES AGE
rook-ceph.cephfs.csi.ceph.com true false false <unset> false Persistent 25h
rook-ceph.rbd.csi.ceph.com true false false <unset> false Persistent 25h
```

PVC snapshots

Change to the rbd directory and create a VolumeSnapshotClass for block storage:

```shell
[root@master-01 ~]# cd /root/rook/deploy/examples/csi/rbd/
[root@master-01 rbd]# kubectl create -f snapshotclass.yaml
volumesnapshotclass.snapshot.storage.k8s.io/csi-rbdplugin-snapclass created
```

Check the VolumeSnapshotClass:

```shell
[root@master-01 rbd]# kubectl get volumesnapshotclasses.snapshot.storage.k8s.io
NAME DRIVER DELETIONPOLICY AGE
csi-rbdplugin-snapclass rook-ceph.rbd.csi.ceph.com Delete 53s
```

Change the image address in pod.yaml:

```shell
[root@master-01 rbd]# cat pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: csirbd-demo-pod
spec:
  containers:
    - name: web-server
      image: registry.cn-guangzhou.aliyuncs.com/caijxlinux/nginx:v1.15.1
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: rbd-pvc
        readOnly: false
[root@master-01 rbd]# cat pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: rook-ceph-block
```

Create the resources and check their status:

```shell
[root@master-01 rbd]# kubectl get pods
NAME READY STATUS RESTARTS AGE
csirbd-demo-pod 1/1 Running 0 45s
[root@master-01 rbd]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE
pvc-a7da1348-9661-4c8e-a04f-8001fab23611 1Gi RWO Delete Bound default/rbd-pvc rook-ceph-block <unset> 117s
[root@master-01 rbd]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE
rbd-pvc Bound pvc-a7da1348-9661-4c8e-a04f-8001fab23611 1Gi RWO rook-ceph-block <unset> 2m
```

Exec into the container and create some data in the mounted directory so that the snapshot can be verified later:

```shell
[root@master-01 rbd]# kubectl exec -it csirbd-demo-pod -- bash
root@csirbd-demo-pod:/# cd /var/lib/www/html/
root@csirbd-demo-pod:/var/lib/www/html# mkdir {1..10}
root@csirbd-demo-pod:/var/lib/www/html# ls
1 10 2 3 4 5 6 7 8 9 lost+found
root@csirbd-demo-pod:/var/lib/www/html# exit
exit
```

Create a snapshot of the PVC from snapshot.yaml and check its status.
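snapshot.yaml comes from the Rook examples directory and its contents are not shown in this post. For reference, a VolumeSnapshot of this kind can be sketched as follows, assuming the names that appear in the output of this walkthrough (rbd-pvc-snapshot, rbd-pvc, csi-rbdplugin-snapclass); the upstream file may differ in detail.

```shell
#!/bin/sh
# Sketch: print a VolumeSnapshot of an existing PVC.
# Defaults mirror the names used in this walkthrough; all are overridable.
render_volume_snapshot() {
  snap="${1:-rbd-pvc-snapshot}"          # snapshot name
  pvc="${2:-rbd-pvc}"                    # PVC to snapshot
  class="${3:-csi-rbdplugin-snapclass}"  # VolumeSnapshotClass to use
  cat <<EOF
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: ${snap}
spec:
  volumeSnapshotClassName: ${class}
  source:
    persistentVolumeClaimName: ${pvc}
EOF
}
```

`render_volume_snapshot | kubectl create -f -` would create an equivalent snapshot; the snapshot is ready to use once READYTOUSE is true.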

```shell
[root@master-01 rbd]# kubectl create -f snapshot.yaml
volumesnapshot.snapshot.storage.k8s.io/rbd-pvc-snapshot created
[root@master-01 rbd]# kubectl get volumesnapshots
NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE
rbd-pvc-snapshot true rbd-pvc 1Gi csi-rbdplugin-snapclass snapcontent-c443696e-f85f-4ba1-8caf-b0b01b9faccb 92s 93s
```

View the underlying VolumeSnapshotContent object associated with the VolumeSnapshot:

```shell
[root@master-01 rbd]# kubectl get volumesnapshotcontents.snapshot.storage.k8s.io
NAME READYTOUSE RESTORESIZE DELETIONPOLICY DRIVER VOLUMESNAPSHOTCLASS VOLUMESNAPSHOT AGE
snapcontent-c443696e-f85f-4ba1-8caf-b0b01b9faccb true 1073741824 Delete rook-ceph.rbd.csi.ceph.com csi-rbdplugin-snapclass rbd-pvc-snapshot 2m52s
```

Create a PVC from pvc-restore.yaml; its data source is the snapshot just created.
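pvc-restore.yaml is also taken from the Rook examples and not reproduced here. A restore PVC differs from an ordinary one only in its `dataSource`, which points at the VolumeSnapshot; because VolumeSnapshot is not a core resource, `apiGroup` must be set. A sketch assuming this walkthrough's names and the 1Gi restore size shown above (the request must be at least that size).

```shell
#!/bin/sh
# Sketch: print a PVC whose initial contents come from a VolumeSnapshot.
# Defaults mirror this walkthrough; size must be >= the snapshot's restore size.
render_restore_pvc() {
  name="${1:-rbd-pvc-restore}"
  snap="${2:-rbd-pvc-snapshot}"
  size="${3:-1Gi}"
  sc="${4:-rook-ceph-block}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  storageClassName: ${sc}
  dataSource:
    name: ${snap}
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${size}
EOF
}
```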

```shell
[root@master-01 rbd]# kubectl create -f pvc-restore.yaml
persistentvolumeclaim/rbd-pvc-restore created
[root@master-01 rbd]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE
rbd-pvc Bound pvc-a7da1348-9661-4c8e-a04f-8001fab23611 1Gi RWO rook-ceph-block <unset> 25m
rbd-pvc-restore Bound pvc-115b0e9f-d367-4b83-9942-dfe95f079116 1Gi RWO rook-ceph-block <unset> 3s
[root@master-01 rbd]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE
pvc-115b0e9f-d367-4b83-9942-dfe95f079116 1Gi RWO Delete Bound default/rbd-pvc-restore rook-ceph-block <unset> 7s
pvc-a7da1348-9661-4c8e-a04f-8001fab23611 1Gi RWO Delete Bound default/rbd-pvc rook-ceph-block <unset> 25m
```

Write pod-restore.yaml to mount this PVC, and check whether the data is correct:

```shell
[root@master-01 rbd]# vim pod-restore.yaml
```

```yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: restore-pod
spec:
  containers:
    - name: web-server
      image: registry.cn-guangzhou.aliyuncs.com/caijxlinux/nginx:v1
      volumeMounts:
        - name: snapshot
          mountPath: /var/lib/www/html
  volumes:
    - name: snapshot
      persistentVolumeClaim:
        claimName: rbd-pvc-restore
        readOnly: false
```

```shell
[root@master-01 rbd]# kubectl create -f pod-restore.yaml
pod/restore-pod created
```

List the contents of the mount directory inside the container: they match the state at the time the snapshot was taken.

```shell
[root@master-01 rbd]# kubectl get pods
NAME READY STATUS RESTARTS AGE
csirbd-demo-pod 1/1 Running 0 30m
restore-pod 1/1 Running 0 29s
[root@master-01 rbd]# kubectl exec -it restore-pod -- ls /var/lib/www/html
1 10 2 3 4 5 6 7 8 9 lost+found
```

Snapshots of file storage (CephFS) work the same way as for block storage, so they are not repeated here; see the official documentation:

https://rook.io/docs/rook/latest/Storage-Configuration/Ceph-CSI/ceph-csi-snapshot/#verify-cephfs-snapshot-creation

PVC cloning

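PVC cloning works much like restoring from a snapshot and serves similar purposes: data backup and recovery, fast deployment of applications (such as MySQL or Nginx), and simpler management.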
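A clone must request at least as much storage as its source PVC. Comparing sizes such as `1Gi` and `1024Mi` by eye is error-prone, so here is a small sketch that converts simple binary-suffix quantities to bytes and checks the rule. It handles only integer values with Ki/Mi/Gi/Ti suffixes or plain byte counts, not the full Kubernetes quantity syntax.

```shell
#!/bin/sh
# Sketch: convert simple binary-suffix quantities (Ki/Mi/Gi/Ti) to bytes.
# Decimal suffixes (k, M, G) and fractional values are not handled.
to_bytes() {
  q="$1"
  case "$q" in
    *Ki) echo $(( ${q%Ki} * 1024 )) ;;
    *Mi) echo $(( ${q%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${q%Gi} * 1024 * 1024 * 1024 )) ;;
    *Ti) echo $(( ${q%Ti} * 1024 * 1024 * 1024 * 1024 )) ;;
    *[!0-9]*|'') return 1 ;;   # unsupported format
    *) echo "$q" ;;            # already a plain byte count
  esac
}

# Succeeds if the clone size ($2) is at least the source size ($1).
clone_size_ok() {
  src="$(to_bytes "$1")" || return 1
  dst="$(to_bytes "$2")" || return 1
  [ "$dst" -ge "$src" ]
}
```

`to_bytes 1Gi` gives 1073741824, the same figure shown as RESTORESIZE for the snapshot content earlier, and `clone_size_ok 1Gi 1024Mi` succeeds because the two sizes are equal.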

Create a PVC clone from pvc-clone.yaml:

```shell
[root@master-01 rbd]# vim pvc-clone.yaml
```

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc-clone
spec:
  storageClassName: rook-ceph-block
  dataSource:
    name: rbd-pvc
    kind: PersistentVolumeClaim
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```

Check the PVC created by the clone. Note: in pvc-clone.yaml, dataSource.name is the name of the PVC being cloned (rbd-pvc here), storageClassName is the StorageClass of the new PVC, and the requested storage must not be smaller than the size of the source PVC.

```shell
[root@master-01 rbd]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE
rbd-pvc Bound pvc-a7da1348-9661-4c8e-a04f-8001fab23611 1Gi RWO rook-ceph-block <unset> 43m
rbd-pvc-clone Bound pvc-b10ee535-e5e3-4a5f-b662-fa3ee98ceaa9 1Gi RWO rook-ceph-block <unset> 3s
rbd-pvc-restore Bound pvc-115b0e9f-d367-4b83-9942-dfe95f079116 1Gi RWO rook-ceph-block <unset> 17m
[root@master-01 rbd]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE
pvc-115b0e9f-d367-4b83-9942-dfe95f079116 1Gi RWO Delete Bound default/rbd-pvc-restore rook-ceph-block <unset> 18m
pvc-a7da1348-9661-4c8e-a04f-8001fab23611 1Gi RWO Delete Bound default/rbd-pvc rook-ceph-block <unset> 44m
pvc-b10ee535-e5e3-4a5f-b662-fa3ee98ceaa9 1Gi RWO Delete Bound default/rbd-pvc-clone rook-ceph-block <unset> 100s
```

Cloning file storage (CephFS) works the same way as block storage, so it is not repeated here; see the official documentation:

https://rook.io/docs/rook/latest/Storage-Configuration/Ceph-CSI/ceph-csi-volume-clone/#verify-rbd-volume-clone-pvc-creation

Deleting Rook and the Ceph cluster, and wiping data

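1. Delete the Pod, PV, PVC, and snapshot resources you created.

2. Delete the pools:

```shell
kubectl delete -n rook-ceph cephblockpool replicapool
kubectl delete -n rook-ceph cephfilesystem myfs
```

3. Delete the StorageClasses (in this post the CephFS class is named rook-cephfs):

```shell
kubectl delete storageclass rook-ceph-block
# Only needed if the upstream kube-registry example was deployed
kubectl delete -f csi/cephfs/kube-registry.yaml
kubectl delete storageclass rook-cephfs
```

4. Set the cleanup policy of the Ceph cluster:

```shell
kubectl -n rook-ceph patch cephcluster rook-ceph --type merge -p '{"spec":{"cleanupPolicy":{"confirmation":"yes-really-destroy-data"}}}'
```

5. Delete the Ceph cluster and confirm that it is gone. Continue only once the output reads "No resources found in rook-ceph namespace":

```shell
kubectl -n rook-ceph delete cephcluster rook-ceph
kubectl -n rook-ceph get cephcluster
```

6. Delete the Rook operator, RBAC, CRDs, and the rook-ceph namespace (do not change this order):

```shell
kubectl delete -f operator.yaml
kubectl delete -f common.yaml
kubectl delete -f crds.yaml
```

7. If deletion has still not finished after a long time, some resources have not been fully removed. As described in the official documentation, run the following loop to clear the finalizers on the Rook custom resources, then check whether any resources remain in the namespace:

```shell
for CRD in $(kubectl get crd -n rook-ceph | awk '/ceph.rook.io/ {print $1}'); do
  kubectl get -n rook-ceph "$CRD" -o name | \
    xargs -I {} kubectl patch -n rook-ceph {} --type merge -p '{"metadata":{"finalizers": []}}'
done

kubectl api-resources --verbs=list --namespaced -o name \
  | xargs -n 1 kubectl get --show-kind --ignore-not-found -n rook-ceph
```

Official documentation for reference:

https://rook.io/docs/rook/latest/Getting-Started/ceph-teardown/#troubleshooting

8. Wipe the disks with the script below. Note: run it only on the data nodes (master-03, node-01, node-02). If you skip this step, the Ceph cluster will fail to create OSDs when you rebuild the Rook cluster.

```shell
# Set this to the disk configured in cluster.yaml (sdb in this post)
DISK="/dev/sdX"

# Return the disk to a fresh, usable state (zapping everything matters, because the MBR must be clean)
sgdisk --zap-all "$DISK"

# Wipe the first 100 MB of the disk to remove any remaining LVM metadata
dd if=/dev/zero of="$DISK" bs=1M count=100 oflag=direct,dsync

# blkdiscard may be more suitable than dd for cleaning SSDs
# (it reports an error on devices that do not support discard)
blkdiscard "$DISK"

# Tell the operating system that the partition table has changed
partprobe "$DISK"
```

Appendix: Mirroring images with Alibaba Cloud and GitHub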

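How it works: a Dockerfile in a GitHub repository tells Alibaba Cloud's overseas build machines which image to pull; they build the image and push it to Alibaba Cloud Container Registry.

1. Prerequisites: an Alibaba Cloud Container Registry and a GitHub account.

2. Log in to GitHub and create a repository. This example mirrors the official Docker MySQL image.

3. Enter the repository name, make the repository private, add a README file, and create the repository.

4. Open the new repository and click Add file → Create new file. Name the file Dockerfile and use content of this form:

```dockerfile
FROM <address of the image to pull>
```

5. To commit, leave the Commit changes options at their defaults and click Commit changes.

6. Log in to the Alibaba Cloud Container Registry console and create a repository with the same name.

7. Select the namespace, give the repository the same name as the image being mirrored, set the repository type to Public (convenient for pulling; Private also works), and click Next.

8. Select GitHub as the code source, choose the namespace and the repository created above, tick the option to build on overseas machines, and click Create Repository.

9. You are taken to the mysql image repository. Click Build in the left navigation bar to open the build page, then click Add Rule. Set the type to Branch, choose main as the Branch/Tag, keep the default Dockerfile name (the file created on GitHub), fill in the image version, and click OK.

10. Click Build Now. The build log appears below; once the build completes, review the log to confirm that the correct overseas image was pulled.

11. When the build has finished, the image versions are listed under the left navigation bar.

12. Verify the result: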

```shell
[root@master-01 rbd]# ctr image pull registry.cn-guangzhou.aliyuncs.com/caijxlinux/mysql:5.6
registry.cn-guangzhou.aliyuncs.com/caijxlinux/mysql:5.6: resolved |++++++++++++++++++++++++++++++++++++++|
manifest-sha256:de7496e4879d4efdf89f28aff2f9a75c12495903bbd64b25799fdae73a1d6015: done |++++++++++++++++++++++++++++++++++++++|
layer-sha256:1f6637f4600d1512fddfb02f66b02d932c7ee19ff9be05398a117af5eb1cbfda: done |++++++++++++++++++++++++++++++++++++++|
config-sha256:8ecf24da337b205e39e3a9074b7862695d1e023ae9dbfeac8401c2c7042bfee8: done |++++++++++++++++++++++++++++++++++++++|
layer-sha256:35b2232c987ef3e6249ed229afcca51cd83320e08f6700022f4a3644a11f00f2: done |++++++++++++++++++++++++++++++++++++++|
layer-sha256:fc55c00e48f2fdde6d83ae9d26de0ad33b91d6861f3e6f356633922f00ca33e0: done |++++++++++++++++++++++++++++++++++++++|
layer-sha256:0030405130e3a248f40e6465e6667e3926cb8b0a8f7e558772ca6a0c58ea9c0e: done |++++++++++++++++++++++++++++++++++++++|
layer-sha256:e1fef7f6a8d192093b8b7c303388112ca9ace69c45690f801dedb435bb4d1327: done |++++++++++++++++++++++++++++++++++++++|
layer-sha256:1c76272398bb61b196a14a8eacdee77dd3337638b3eddf3815107fa6fdb507e6: done |++++++++++++++++++++++++++++++++++++++|
layer-sha256:f57e698171b646d432b1ffdf81ea1983278fe79ff560e5a1d12ccf0fe15bdd3d: done |++++++++++++++++++++++++++++++++++++++|
layer-sha256:f5b825b269c0c5e254d28469fcd84eeab4d9eff0817bf38562ec1a1529b3f29c: done |++++++++++++++++++++++++++++++++++++++|
layer-sha256:dcb0af686073bf5f2a8f12afa8fcb2c73866ec7465d0fe67c08f5146f9aa186c: done |++++++++++++++++++++++++++++++++++++++|
layer-sha256:27bbfeb886d15ce8628fc7b6e1ac047287dde0863e0ae1e01f61e61390742740: done |++++++++++++++++++++++++++++++++++++++|
layer-sha256:6f70cc8681456d52b0c4c2d10571c2919c14afc8218ba68428197aa298fd3c5f: done |++++++++++++++++++++++++++++++++++++++|
elapsed: 20.0s total: 98.2 M (4.9 MiB/s)
unpacking linux/amd64 sha256:de7496e4879d4efdf89f28aff2f9a75c12495903bbd64b25799fdae73a1d6015...
done: 11.340034411s
[root@master-01 rbd]# ctr images list
REF TYPE DIGEST SIZE PLATFORMS LABELS
registry.cn-guangzhou.aliyuncs.com/caijxlinux/mysql:5.6 application/vnd.docker.distribution.manifest.v2+json sha256:de7496e4879d4efdf89f28aff2f9a75c12495903bbd64b25799fdae73a1d6015 98.2 MiB linux/amd64 -
```
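The mirrored repositories in this post are named after the last path component of the upstream image with the same tag (for example `caijxlinux/csi-resizer:v1.10.1` in operator.yaml and `caijxlinux/mysql:5.6` here). Assuming that convention, a small helper can compute the mirror address to substitute when editing manifests; the default prefix is the author's namespace and should be replaced with your own.

```shell
#!/bin/sh
# Sketch: rewrite an upstream image reference to a mirror namespace by keeping
# only the last path component (repository name and tag).
# Assumes each mirror repository was created with the upstream name and tag.
MIRROR_PREFIX="${MIRROR_PREFIX:-registry.cn-guangzhou.aliyuncs.com/caijxlinux}"

mirror_image() {
  ref="$1"
  echo "${MIRROR_PREFIX}/${ref##*/}"   # ##*/ strips everything up to the last "/"
}
```

For example, `mirror_image quay.io/cephcsi/cephcsi:v3.11.0` prints `registry.cn-guangzhou.aliyuncs.com/caijxlinux/cephcsi:v3.11.0`, which could then be used in a `sed -i` replacement on operator.yaml, provided that repository has actually been built with the steps above.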
